Musical Representations

This work leads to applications in computer-assisted composition, performance, improvisation, interpretation and computational musicology. Reflection on high-level representations of musical concepts and structures, supported by original computer languages developed by the team, leads to the implementation of models that can be turned toward musical analysis as well as creation, and toward composition as well as performance and improvisation.

On the musicological side, representation and modeling tools make a genuinely experimental approach possible, which significantly invigorates the discipline.

On the creative side, the goal is to design musical companions that interact with composers, musicians, sound engineers and others in every phase of the musical workflow. The software developed has reached a large community of musicians. It gives concrete form to original modes of thought tied to a particular property of computer media: they can represent (and execute) at once the final score, its various levels of formal elaboration, its algorithmic generators and its sound realizations, while also enabling interaction in the heat of performance, notably in improvisation.

The team brings together symbolic interaction and artificial creativity through its work on modeling improvisation and on new open, dynamic compositional forms. This research advances artificial intelligence, with models of listening, generative learning and synchronization, and lays the foundations for new creative-agent technologies that can become musical companions endowed with artificial musicality ("machine musicianship"). It prefigures the cooperative dynamics inherent in cyber-human networks and the emergence of forms of human-machine co-creativity.

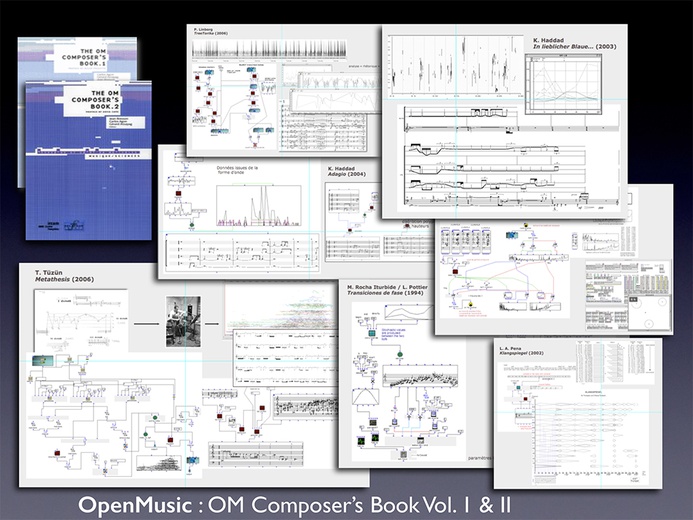

The team has a long history of intensive collaboration with composers and musicians, both within and outside Ircam. The three volumes of The OM Composer's Book document this work and ensure its international dissemination and long-term preservation.

Main Research Themes

- Computer-assisted composition: OpenMusic software; Antescofo

- Orchestration: computer-assisted orchestration; ACTOR project; Orchid* software

- Control of synthesis and spatialization, writing time

- Mathematics and music

- Computer languages for creation: OpenMusic software; Antescofo

- Dynamics of improvised interaction, co-creativity: DYCI2, MERCI and REACH projects; Somax2 software

- Creative artificial intelligence: ACIDS project

- Studies of musical structures in performance: COSMOS project

- Studies related to therapeutics: HEART.FM project

Areas of Expertise

Computer-assisted {composition, analysis, performance, improvisation, orchestration}, computational musicology, creative artificial intelligence, musical computer languages, mathematical music theory, synchronous real-time languages, executable notations, interaction architectures, computational (co-)creativity.

Premier collage de l'identité du logiciel OpenMusic par A. Mohsen © Philippe Barbosa

Premier collage de l'identité du logiciel OpenMusic par A. Mohsen © Philippe Barbosa Mederic Collignon improvise en concert avec le logiciel OMax (RIM B. Lévy)

Mederic Collignon improvise en concert avec le logiciel OMax (RIM B. Lévy) Moreno Andreatta, chercheur CNRS-Ircam dans son bureau © Philippe Barbosa

Moreno Andreatta, chercheur CNRS-Ircam dans son bureau © Philippe Barbosa OM Composer's Book

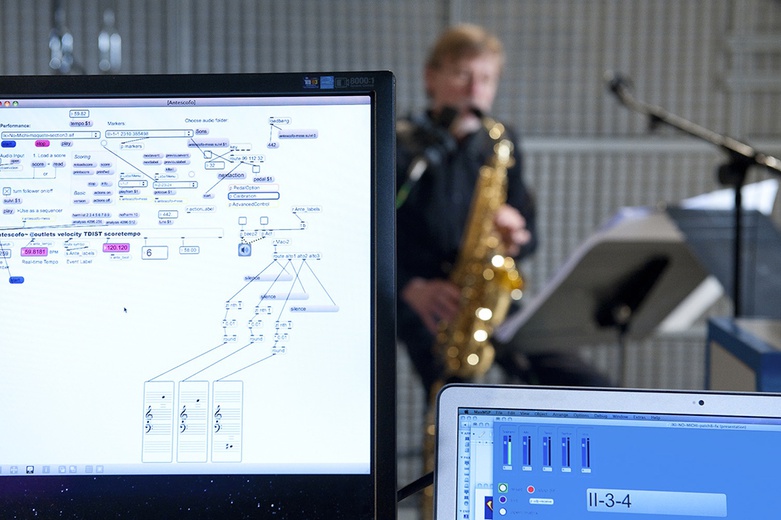

OM Composer's Book Claude Delangle en studio avec le logiciel Antescofo © Inria / H. Raguet

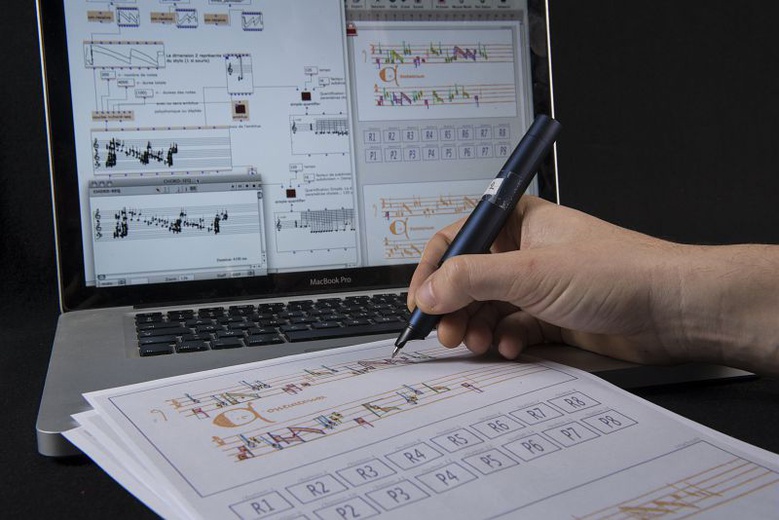

Claude Delangle en studio avec le logiciel Antescofo © Inria / H. Raguet Papier Intelligent, écriture de l’espace dans la composition © Inria

Papier Intelligent, écriture de l’espace dans la composition © Inria

Areas of Expertise

Computer-assisted composition and analysis, computational musicology, cognitive musicology, artificial intelligence, computer languages, machine learning, algebraic and geometric methods, symbolic interaction, synchronous and timed real-time languages, executable notations.

Research Areas and Associated Projects

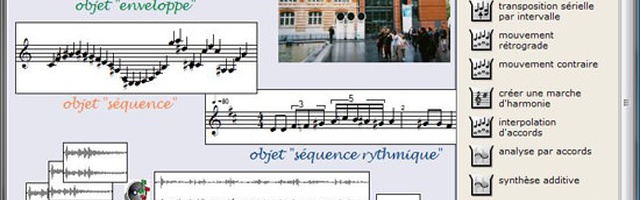

Computer-assisted composition: writing sound, time and space

Designing computational models suited to creative processes, integrating computation paradigms, interactions and musical representations

Mathematics and music

Algebraic, topological and categorical models in computational musicology

Computer-assisted orchestration

Orchestration through automatic search for instrumentations and instrument combinations that approximate a target defined by the composer
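To illustrate the idea of searching for an instrument combination that approximates a target, here is a minimal sketch using a simple greedy spectral-matching heuristic. It is not the algorithm used in Orchid*. The sample names, their coarse "spectra", the additive mixing model and the Euclidean distance are all hypothetical assumptions chosen for illustration.

```python
import math

# Hypothetical sample "database": each entry is an instrument sample described
# by a coarse amplitude spectrum over the same frequency bins (values invented
# for illustration, not taken from any real orchestral database).
SAMPLES = {
    "flute_A4": [0.9, 0.3, 0.1, 0.0],
    "oboe_A4": [0.6, 0.5, 0.4, 0.2],
    "horn_A3": [0.8, 0.6, 0.2, 0.1],
    "violin_A4": [0.7, 0.4, 0.3, 0.3],
}


def distance(a, b):
    """Euclidean distance between two spectra of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mix(spectra, n_bins):
    """Naive additive model (assumption): a combination's spectrum is the bin-wise sum."""
    total = [0.0] * n_bins
    for s in spectra:
        for i, v in enumerate(s):
            total[i] += v
    return total


def greedy_orchestrate(target, samples, max_instruments=3):
    """Greedily add the sample that most reduces the distance to the target.

    Stops when no remaining sample improves the match or when
    max_instruments samples have been chosen.
    """
    chosen = []
    n_bins = len(target)
    best = distance(mix([], n_bins), target)  # distance of silence to the target
    while len(chosen) < max_instruments:
        candidate, candidate_dist = None, best
        for name, spectrum in samples.items():
            if name in chosen:
                continue
            trial = mix([samples[c] for c in chosen] + [spectrum], n_bins)
            d = distance(trial, target)
            if d < candidate_dist:
                candidate, candidate_dist = name, d
        if candidate is None:  # no sample brings the mix closer to the target
            break
        chosen.append(candidate)
        best = candidate_dist
    return chosen, best
```

For a target of `[1.5, 0.8, 0.4, 0.1]`, this search first picks the horn sample, then the flute, and stops once any third instrument would only move the mix away from the target. A greedy search is the simplest strategy and can miss better combinations; more exhaustive search methods trade speed for quality.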

Somax2

Somax2 is an application for musical improvisation and composition. It is implemented in Max and is based on a generative model that uses a process similar to concatenative synthesis to produce stylistically coherent improvisation while listening and adapting to a musician (or any other audio or MIDI source) in real time. The model operates in the symbolic domain and is trained on a musical corpus of one or more MIDI files, from which it draws the material it uses to improvise. With little configuration it can interact autonomously with a musician, but it also allows manual control of its generative process, effectively letting the model serve as an instrument that can be played in its own right.
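The corpus-based, concatenative principle described above can be illustrated with a toy sketch. It is a hypothetical simplification, not Somax2's actual model. Corpus slices are reduced to plain string labels (for example pitch classes), and the continue-or-jump rule as well as the names `build_index` and `improvise` are assumptions made for illustration. At each step the generator keeps following the corpus when its next slice already matches what the musician plays, and otherwise jumps to a matching slice elsewhere in the corpus.

```python
import random


def build_index(corpus):
    """Map each label (e.g. a pitch class) to the corpus positions carrying it."""
    index = {}
    for pos, label in enumerate(corpus):
        index.setdefault(label, []).append(pos)
    return index


def improvise(corpus, listening, rng=None):
    """Output one corpus slice for each label heard from the musician.

    Hypothetical rule: continue linearly through the corpus when the next
    slice matches what is heard (or when nothing in the corpus matches);
    otherwise jump to a randomly chosen matching slice, i.e. concatenate
    material taken from another place in the corpus.
    """
    if not corpus:
        return []
    rng = rng or random.Random(0)
    index = build_index(corpus)
    pos = -1  # position "before" the first slice
    output = []
    for heard in listening:
        nxt = (pos + 1) % len(corpus)
        candidates = index.get(heard, [])
        if corpus[nxt] == heard or not candidates:
            pos = nxt  # continue: preserve the corpus's own continuity
        else:
            pos = rng.choice(candidates)  # jump to a slice matching the input
        output.append(corpus[pos])
    return output
```

With the corpus `C E G C A F` and a musician playing `C E D A`, the generator follows the corpus for the first three steps (nothing in the corpus matches `D`, so it simply continues) and jumps to the `A` slice on the last step, producing `C E G A`.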

National and European Projects

ACIDS

Creative Artificial Intelligence and Data Science

ACTOR

Analysis, Creation and Teaching of Orchestration

REACH

Raising Co-Creativity in Cyber-Human Musicianship

COSMOS

Computational Shaping and Modeling of Musical Structures

DAFNE+

Decentralized platform for fair creative content distribution empowering creators and communities through new digital distribution models based on digital tokens

Heart.FM

Maximizing the Therapeutic Potential of Music through Tailored Therapy with Physiological Feedback in Cardiovascular Disease

MERCI

Mixed Musical Reality with Creative Instruments

Software (Design & Development)

OpenMusic

Antescofo

OMax & co

Orchid*

Musique Lab 2

Team

Team leader: Gérard Assayag

Researchers & engineers: Mikhail Malt, Carlos Agon Amado, Jean-Louis Giavitto, Karim Haddad, José Miguel Fernandez, Moreno Andreatta, Philippe Esling

Director of the UMR:

Administration: Vasiliki Zachari

Composer: Claudy Malherbe

PhD students: Hector Teyssier, Romain Buguet, Sébastien Li, Marco Fiorini, Yohann Rabearivelo, David Genova, Nils Demerlé, Orian Sharoni

Associate researchers: Georges Bloch

Collaborations

Bergen Center for Electronic Arts (Norway), CIRMMT/McGill University (Canada), City University London, CNSMDP, Columbia University (New York), CNMAT/UC Berkeley, Electronic Music Foundation, Gmem, Grame (Lyon), École normale supérieure (Paris), ESMUC (Barcelona), Harvard University, Inria, IReMus (Sorbonne Paris-4), University of Jyväskylä, University of Bologna, USC (Los Angeles), Université Marc Bloch (Strasbourg), Pontificia Universidad Javeriana (Cali), Université Paris-Sud (Orsay), University of Pisa, UPMC (Paris), UC San Diego, Yale, University of Minnesota, University of Washington.