Acoustic and Cognitive Spaces

The team's scientific disciplines are signal processing and acoustics, applied to the development of spatialized audio reproduction techniques and of methods for the analysis and synthesis of sound fields. In parallel, the team devotes a substantial part of its work to cognitive studies of multisensory integration, to support the principled development of new sonic mediations based on body/hearing/space interaction. The scientific research activities described below go hand in hand with the development of software libraries. These developments record the team's know-how, support its theoretical and experimental research, and are the main vehicle for our relationship with musical creation and other application sectors.

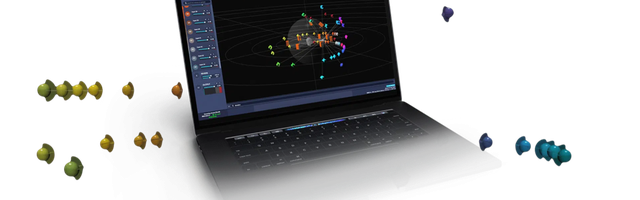

Work on spatialization techniques focuses on models based on a physical formalism of the sound field. The main objective is to develop a formal framework for sound field analysis/synthesis that exploits spatial room impulse responses (SRIRs). SRIRs are generally measured with spherical arrays comprising several dozen transducers (microphones and/or loudspeakers). The main application is the development of convolution reverberators that use these high-spatial-resolution SRIRs to faithfully reproduce the complexity of the sound field.
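As an illustration only, the principle of a convolution reverberator driven by a multichannel SRIR can be sketched in a few lines of Python with NumPy. This is a minimal offline sketch, not the team's implementation: the function name `convolve_srir`, the array layout (one row per SRIR channel) and the toy decaying-noise response are all assumptions made for the example.

```python
import numpy as np

def convolve_srir(dry, srir):
    """Convolve a mono signal with every channel of a multichannel impulse response.

    dry  : 1-D array, the dry (anechoic) source signal.
    srir : 2-D array of shape (n_channels, n_taps), one impulse response per
           channel (hypothetical layout chosen for this sketch).
    Returns an array of shape (n_channels, len(dry) + n_taps - 1).
    """
    dry = np.asarray(dry, dtype=float)
    srir = np.atleast_2d(np.asarray(srir, dtype=float))
    n_out = dry.shape[0] + srir.shape[1] - 1
    # Zero-pad to a power of two so that the circular convolution computed
    # by the FFT equals the linear convolution over the first n_out samples.
    n_fft = 1 << (n_out - 1).bit_length()
    dry_spectrum = np.fft.rfft(dry, n_fft)
    srir_spectrum = np.fft.rfft(srir, n_fft, axis=1)
    wet = np.fft.irfft(srir_spectrum * dry_spectrum, n_fft, axis=1)
    return wet[:, :n_out]

# Toy usage: a 4-channel synthetic response made of exponentially decaying
# noise. This is placeholder data, not a measured SRIR.
rng = np.random.default_rng(0)
toy_srir = rng.standard_normal((4, 256)) * np.exp(-np.arange(256) / 40.0)
toy_dry = rng.standard_normal(1000)
toy_wet = convolve_srir(toy_dry, toy_srir)
```

A real-time reverberator would typically use partitioned convolution to keep latency low; the single-FFT version above only shows the underlying operation.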

Binaural spatialization over headphones is also a focus of our attention. Changing listening practices and the spread of interactive applications tend to favor headphone listening, driven by smartphone use. Thanks to its capacity for sonic immersion, binaural listening is becoming the primary vehicle for three-dimensional listening. Based on head-related transfer functions (HRTFs), it remains to date the only approach that ensures an exact and dynamic reconstruction of the cues responsible for auditory localization. It has established itself as a reference tool for experimental research on spatial cognition in a multisensory context and for virtual reality applications.
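The basic operation behind HRTF-based binaural rendering can be sketched as follows, under stated assumptions. The sketch works with head-related impulse responses (HRIRs, the time-domain counterpart of HRTFs), picks the pair measured closest to the source direction once the listener's head rotation is compensated, and convolves the source with it. The function names, the azimuth convention (degrees, increasing to the listener's left) and the toy delay-and-gain responses are hypothetical; they stand in for measured HRTF data and do not describe the team's software.

```python
import numpy as np

def nearest_hrir(azimuths_deg, hrirs, source_az_deg, head_yaw_deg=0.0):
    """Pick the HRIR pair measured closest to the source direction relative to the head.

    azimuths_deg : 1-D sequence of measured azimuths (degrees).
    hrirs        : array of shape (n_directions, 2, n_taps), left/right pairs.
    """
    relative = (source_az_deg - head_yaw_deg) % 360.0
    # Angular distance on the circle, so that 359 deg and 1 deg are 2 deg apart.
    diff = np.abs((np.asarray(azimuths_deg) - relative + 180.0) % 360.0 - 180.0)
    return hrirs[int(np.argmin(diff))]

def binaural_render(mono, hrir_pair):
    """Convolve a mono signal with a (2, n_taps) left/right HRIR pair."""
    left = np.convolve(mono, hrir_pair[0])
    right = np.convolve(mono, hrir_pair[1])
    return np.stack([left, right])

# Toy data: four directions, each reduced to a pure delay and gain per ear.
# These are placeholders that mimic interaural time and level differences.
toy_azimuths = [0.0, 90.0, 180.0, 270.0]
toy_hrirs = np.zeros((4, 2, 8))
toy_hrirs[0, :, 1] = 1.0                          # front: same at both ears
toy_hrirs[1, 0, 0], toy_hrirs[1, 1, 3] = 1.0, 0.5  # left: right ear later, quieter
toy_hrirs[2, :, 1] = 0.8                          # back
toy_hrirs[3, 0, 3], toy_hrirs[3, 1, 0] = 0.5, 1.0  # right: left ear later, quieter

source = np.random.default_rng(0).standard_normal(480)
pair = nearest_hrir(toy_azimuths, toy_hrirs, source_az_deg=100.0, head_yaw_deg=20.0)
stereo = binaural_render(source, pair)
```

Re-running the selection whenever the tracked head orientation changes is what makes the rendering dynamic; a practical renderer would also interpolate between neighboring HRIRs and crossfade to avoid audible switching.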

These 3D audio spatialization techniques, combined with devices that capture the movements of the performer or listener in space, form an essential organological basis for addressing questions of "musical, sound, and multimedia interaction". In parallel, they feed research on the cognitive mechanisms related to the sensation of space, in particular the necessary coordination between the different sensory modalities (hearing, vision, proprioception, motor control, etc.) for the perception and representation of space. We seek to uncover the influence of the different acoustic cues (localization, distance, reverberation, etc.) used by the human central nervous system on the integration of sensory information and on its interaction with emotional processes.

On the musical side, our ambition is to provide models and tools that allow composers to integrate the spatial placement of sounds from the composition stage through to the concert situation, thereby helping to raise spatialization to the status of a parameter of musical writing. More generally, in the artistic field, this research also applies to post-production, interactive sound installations, and dance, through the issues raised by sound/space/body interaction.

Main research themes

- Sound spatialization: hybrid reverberation and spatial room impulse responses (SRIR), SRIR analysis-synthesis, sound field synthesis with high-spatial-density arrays, WFS and HOA system of the Espace de projection, binaural listening, CONTINUUM, distributed spatialization, augmented reality

- Cognitive foundations: multisensory integration and emotion, music and brain plasticity, distance perception in augmented reality

- Creation / Mediation: room auralization, urban and landscape composition, acoustic study of the Dendara temple, corpus-based directivity synthesis

Studio 1 © Laurent Ardhuin, UPMC

Studio 1 © Philippe Barbosa

© Philippe Barbosa

VERVE project © Cyril Fresillon, CNRS

The Espace de projection equipped with the WFS system © Philippe Migeat

Collaborations

ARI-ÖAW (Austria), Bayerischer Rundfunk (Germany), BBC (United Kingdom), B<>COM (France), Ben Gurion University (Israel), Conservatoire national supérieur de musique et de danse de Paris (France), CNES (France), elephantcandy (Netherlands), France Télévisions (France), Fraunhofer IIS (Germany), Hôpital de la Salpêtrière (France), HEGP (France), University Hospital Zurich (Switzerland), IRBA (France), IRT (Germany), L-Acoustics (France), Joanneum Research (Austria), LAM (France), McGill University (Canada), Orange-Labs (France), RWTH (Germany), Radio France (France), RPI (United States)

Research areas and associated projects

National and European projects

Continuum

Live performance augmented in its sonic dimensions

DAFNE+

Decentralized platform for fair creative content distribution empowering creators and communities through new digital distribution models based on digital tokens

HAIKUS

Augmented reality and machine learning

RASPUTIN

Simulation of architectural acoustics for a better spatial understanding using real-time immersive navigation

The Island

Software (design & development)

SPAT Revolution

Spat~

ToscA

Panoramix

ADMix Tools

Team

Team leader: Olivier Warusfel

Researchers & engineers: Markus Noisternig, Thibaut Carpentier, Valentin Bauer, Benoît Alary, Isabelle Viaud-Delmon

Engineer: Coralie Vincent

PhD students: Anthony Gallien, Alice Pain

Intern: Paulin Roman