Sound Music Movement Interaction

Our work covers the entire interactive process chain: capturing and analysing gestures and sounds, tools for managing interaction and synchronization, and real-time sound synthesis and processing techniques. This research, and the software developed alongside it, is generally carried out within interdisciplinary projects that bring together scientists, artists, educators and designers. It finds applications in artistic creation, music education and movement learning, as well as in medical fields such as rehabilitation guided by sound and music.

Main research topics

- Modelling gestures and movements: this topic covers the development of models based on experimental studies, generally concerning the interaction between sound and movement, ranging from instrumentalists' playing to dance movements.

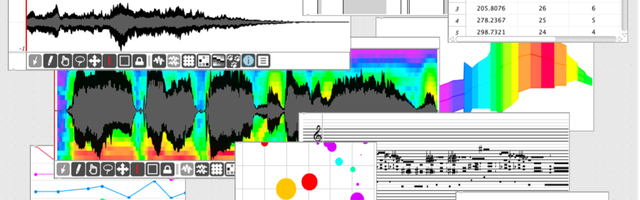

- Interactive sound synthesis and processing: this topic mainly covers sound synthesis and processing methods based on recorded sounds or large sound corpora.

- Gesture-based interactive sound systems and new instruments: this topic concerns the design and development of interactive sound environments driven by gestures, movement and touch. Interactive machine learning is one of the tools developed specifically for this purpose.

- Collective musical interaction and distributed systems: this topic addresses musical interaction involving anywhere from a few users to several hundred. It focuses in particular on developing a Web-based environment that combines computers, smartphones and/or embedded systems to explore new forms of expressive, synchronized interaction.

Areas of expertise

Interactive sound systems, human-computer interaction, motion capture, sound and gesture modelling, real-time sound analysis and synthesis, statistical modelling and interactive machine learning, signal processing, distributed interactive systems.

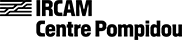

R-IoT: 9-degree-of-freedom gesture-sensing board with wireless transmission © Philippe Barbosa

Connected tennis rackets © Philippe Barbosa

MO - Modular Musical Objects © NoDesign.net

CoSiMa project © Philippe Barbosa

Siggraph installation, 2014 © DR

Collaborations

Atelier des feuillantines, BEK (Norway), CNMAT Berkeley (United States), Cycling '74 (United States), ENSAD, ENSCI, GRAME, HKU (Netherlands), Pitié-Salpêtrière Hospital, ICK Amsterdam (Netherlands), IEM (Austria), ISIR-CNRS Sorbonne Université, Little Heart Movement, Mogees (United Kingdom/Italy), No Design, Motion Bank (Germany), LPP-CNRS Université Paris-Descartes, Pompeu Fabra University (Spain), UserStudio, CRI-Paris Université Paris-Descartes, Goldsmiths University of London (United Kingdom), University of Geneva (Switzerland), LIMSI-CNRS Université Paris-Sud, LRI-CNRS Université Paris-Sud, Orbe.mobi, Plux (Portugal), ReacTable Systems (Spain), UCL (United Kingdom), Univers Sons/Ultimate Sound Bank, Universidad Carlos III de Madrid (Spain), University of Genoa (Italy), McGill University (Canada), ZHdK (Switzerland).

Research areas and related projects

Augmented instruments

Acoustic instruments with integrated sensors

Corpus-based concatenative synthesis

Database of recorded sounds, segmented and indexed by audio descriptors
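The selection step at the core of corpus-based concatenative synthesis can be pictured as a nearest-neighbour search in descriptor space. The sketch below is a minimal illustration under stated assumptions, not the team's implementation: the three descriptors (pitch, loudness, spectral centroid), the `Unit` type, the helper names and the tiny corpus are all hypothetical, and the search is a plain linear scan using a Euclidean distance scaled by each descriptor's standard deviation.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical descriptor set, chosen for illustration only:
# (pitch in MIDI notes, loudness in dB, spectral centroid in Hz)
Descriptors = Tuple[float, float, float]


@dataclass
class Unit:
    """One segment of a recorded sound, indexed by its descriptor values."""
    source: str               # hypothetical sound file name
    start: float              # segment onset within the file, in seconds
    duration: float           # segment length, in seconds
    descriptors: Descriptors


def descriptor_scales(corpus: List[Unit]) -> List[float]:
    """Standard deviation of each descriptor over the corpus, used to normalize distances."""
    scales = []
    for d in range(len(corpus[0].descriptors)):
        values = [u.descriptors[d] for u in corpus]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scales.append(math.sqrt(var) or 1.0)  # avoid dividing by zero for constant descriptors
    return scales


def select_unit(corpus: List[Unit], target: Descriptors, scales: List[float]) -> Unit:
    """Return the unit closest to the target under a scaled Euclidean distance (linear scan)."""
    def distance(unit: Unit) -> float:
        return math.sqrt(sum(((a - b) / s) ** 2
                             for a, b, s in zip(unit.descriptors, target, scales)))
    return min(corpus, key=distance)


# Usage with a tiny made-up corpus (values are illustrative only).
corpus = [
    Unit("violin.wav", 0.00, 0.25, (67.0, -20.0, 1200.0)),
    Unit("violin.wav", 0.25, 0.25, (72.0, -18.0, 1800.0)),
    Unit("flute.wav", 0.00, 0.30, (79.0, -25.0, 2500.0)),
]
scales = descriptor_scales(corpus)
best = select_unit(corpus, (71.0, -19.0, 1700.0), scales)
print(best.source, best.start)  # violin.wav 0.25
```

Repeating this selection for a target that changes over time (for example, one driven by a gesture or by descriptors of an incoming sound) and playing each chosen segment gives a simple form of interactive navigation through the corpus. For large corpora, a spatial index such as a k-d tree would typically replace the linear scan.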

National and European projects

OpenTuning

Creative individuation through interaction design with generative music systems

Aqua-Rius

Audio quality analysis to represent, index and unify signals

DAFNE+

Decentralized platform for fair creative content distribution empowering creators and communities through new digital distribution models based on digital tokens

DOTS

Distributed musical objects for collective interaction

Element

Stimulating movement learning in human-computer interaction

Inside Artificial Improvisation

Inside the black box of artificial improvisation

MICA

Musical Improvisation and Collective Action

Software (design & development)

MuBu

Gesture following and temporal shape following

CataRT

Team

Team leader: Frederic Bevilacqua

Researchers & engineers: Jerome Nika, Benjamin Matuszewski, Diemo Schwarz, Riccardo Borghesi

Engineer: Coralie Vincent

PhD students: Ulysse ROUSSEL, Léo MERCIER, Louise GREBEL