Computer-assisted composition: writing sound, time and space

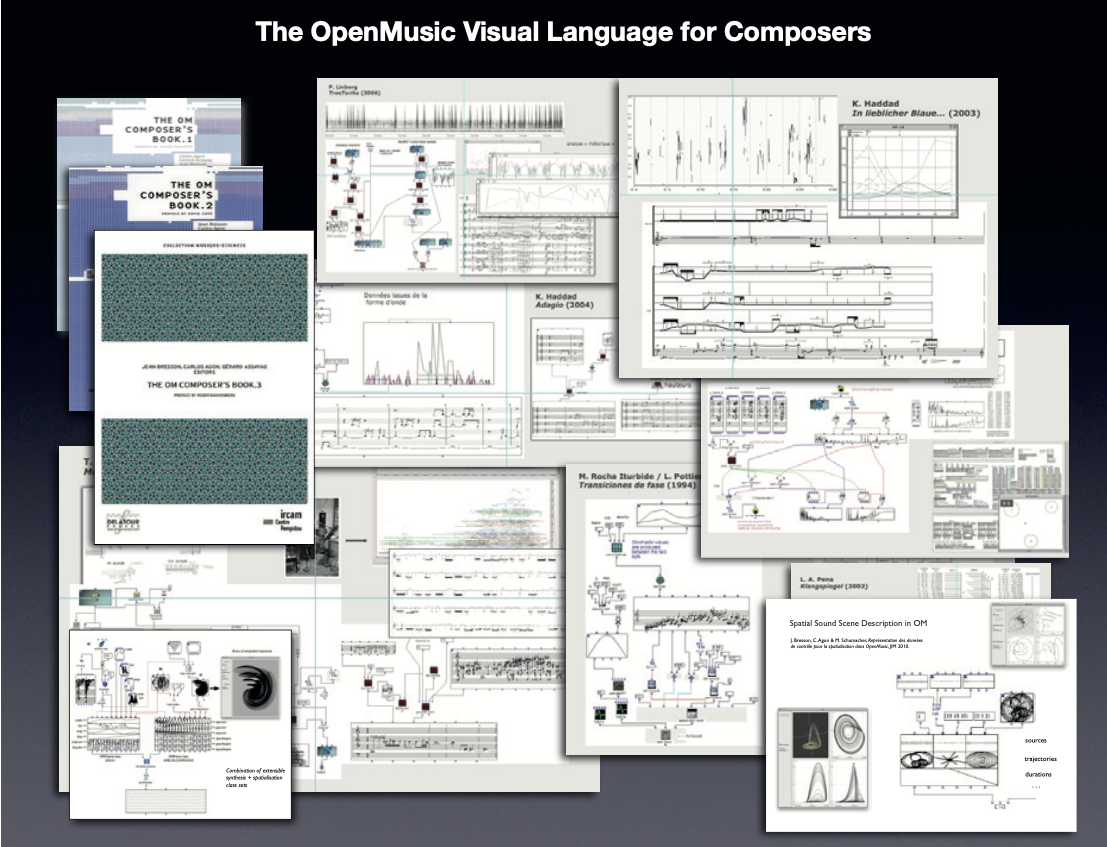

Research in computer-assisted composition (CAC) aims to study and design computational models and techniques suited to creative processes, integrating computational paradigms, interaction and musical representations. The approach is primarily symbolic: it relies on programming languages to create and process harmonic, temporal and rhythmic data, along with the other dimensions involved in compositional processes. Our work in this field centres on OpenMusic, a visual programming language based on Common Lisp and dedicated to musical composition. Contemporary music composers have used this environment for about fifteen years. It is now considered one of the main references in computer-assisted composition and has been downloaded tens of thousands of times by users around the world.

OpenMusic (OM) is a visual programming environment for computer-assisted composition and musical analysis. OM gives the user a large set of modules, each associated with a function. The user connects these modules to build a program (or patch) that generates or transforms musical structures and data. OM also provides many editors for manipulating these data, as well as libraries specialized in areas such as sound analysis and synthesis, mathematical models and constraint solving. Original interfaces such as the maquette editor make it possible to build structures that combine functional and temporal relations between musical objects. OpenMusic is used by many composers and musicologists. It is taught in the major computer music centres and in several universities in Europe and around the world.
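The patch model described above is a dataflow graph evaluated on demand. When a box is evaluated, it first requests the values of the boxes connected to its inputs, then applies its own function. The Python sketch below shows only this evaluation principle. The `Box` class and the box names are hypothetical and do not reflect OpenMusic's actual Common Lisp implementation. Pitches are given in midicents (100 per semitone, 6000 = middle C), the unit OM uses for pitch.

```python
from typing import Any, Callable, List


class Box:
    """Hypothetical patch box: a function plus the inputs wired into it."""

    def __init__(self, name: str, fn: Callable[..., Any], inputs: List[Any]):
        self.name = name
        self.fn = fn
        self.inputs = inputs  # upstream Box objects or constant values

    def evaluate(self) -> Any:
        # Demand-driven evaluation: pull values from upstream boxes first;
        # constants are passed through unchanged.
        args = [src.evaluate() if isinstance(src, Box) else src for src in self.inputs]
        return self.fn(*args)


# A tiny patch: a chord (midicents) -> transpose by a fifth -> keep low pitches.
chord = Box("chord", lambda: [6000, 6400, 6700, 7200], [])
transpose = Box("transpose", lambda pitches, interval: [p + interval for p in pitches], [chord, 700])
keep_low = Box("keep-low", lambda pitches, top: [p for p in pitches if p <= top], [transpose, 7500])

print(keep_low.evaluate())  # [6700, 7100, 7400]
```

In this model, nothing is computed until the last box of the chain is evaluated. Evaluation requests then travel back up the graph to the chord.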

Recently, a new computation and programming paradigm was introduced in OpenMusic. It combines the existing functional, demand-driven approach with a reactive approach inspired by real-time interactive systems (event-driven). Activating reactive chains in visual programs extends the possibilities for interaction in the CAC environment. A change or action by the user (an event) in a program, or in the data it contains, triggers a series of reactions that update the program (re-evaluation). An event can also come from an external source, typically a MIDI or UDP port opened and attached to an element of the visual program. Visual programs can therefore exchange data in both directions with external applications or devices.
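The following sketch combines the two evaluation modes. Evaluation still pulls values from upstream boxes on demand. When an event changes a value, however, boxes flagged as reactive re-evaluate and pass the change on to their own outputs. All names here (`Box`, `Source`, `receive`, the `reactive` flag) are hypothetical. The MIDI input is simulated by plain method calls, and the code is not OpenMusic's API.

```python
from typing import Any, Callable, List, Sequence


class Box:
    """Hypothetical box: evaluated on demand, optionally reactive to events."""

    def __init__(self, name: str, fn: Callable[..., Any],
                 inputs: Sequence[Any] = (), reactive: bool = False):
        self.name = name
        self.fn = fn
        self.inputs = list(inputs)
        self.reactive = reactive
        self.outputs: List["Box"] = []
        self.value: Any = None
        for src in self.inputs:
            if isinstance(src, Box):
                src.outputs.append(self)  # register the downstream connection

    def evaluate(self) -> Any:
        # Demand-driven part: pull values from upstream boxes, then compute.
        args = [src.evaluate() if isinstance(src, Box) else src for src in self.inputs]
        self.value = self.fn(*args)
        return self.value

    def notify(self) -> None:
        # Event-driven part: a change here re-evaluates reactive downstream
        # boxes, which in turn notify their own outputs.
        for out in self.outputs:
            if out.reactive:
                out.evaluate()
                out.notify()


class Source(Box):
    """Hypothetical entry point whose value is set by an event
    (user edit, incoming MIDI or UDP message, ...)."""

    def __init__(self, name: str, value: Any):
        super().__init__(name, lambda: self.value, reactive=True)
        self.value = value

    def receive(self, value: Any) -> None:
        self.value = value
        self.notify()


received: List[int] = []
note_in = Source("note-in", 60)
transpose = Box("transpose", lambda n, k: n + k, [note_in, 12], reactive=True)
display = Box("display", lambda n: received.append(n) or n, [transpose], reactive=True)

# Simulated incoming events, e.g. MIDI note numbers from an external device.
note_in.receive(64)
note_in.receive(67)
print(received)  # [76, 79]
```

In this sketch, propagation stops at any box that is not flagged as reactive. Because every reaction re-pulls its inputs, an upstream box can be evaluated more than once per event. A real system would cache values to avoid this.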

The CAC environment thus becomes part of the temporality of a larger system, which may itself be driven by the events and interactions produced by or within that system. This temporality may be that of the compositional process or that of performance. The project produced the open-source software OM#, which is now developed independently of Ircam.

Technologies for analysing, processing and synthesizing sound signals open up new ways of writing music that place sound creation at the heart of composition. OpenMusic integrates these technologies through a set of specialized libraries. These libraries link the programs created in the CAC environment to sound processing, synthesis and spatialization processes. Those processes run mainly on Ircam tools such as SuperVP, Pm2, Chant, Modalys and Spat~, but also on external tools such as Csound and Faust. This convergence of sound and CAC is a new approach to representing and processing sound through high-level symbolic programs and data structures.
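One common way to link symbolic data to an external synthesis engine is to translate that data into the engine's input format. The sketch below turns a list of symbolic events into Csound score `i` statements and closes the score with `e`. In Csound, p1 is the instrument number, p2 the start time and p3 the duration. The meaning of p4 and p5 (here amplitude and frequency) is an assumption: it depends entirely on how the orchestra's instrument reads those fields. The event tuple format and the function name are hypothetical and are not taken from any OpenMusic library.

```python
from typing import List, Tuple

# Hypothetical event format: (onset s, duration s, amplitude 0..1, frequency Hz)
Event = Tuple[float, float, float, float]


def to_csound_score(events: List[Event], instr: int = 1) -> str:
    """Render symbolic events as Csound score 'i' statements.

    p1 = instrument, p2 = start, p3 = duration; p4/p5 (amplitude, frequency)
    follow an assumed convention that the instrument must implement.
    """
    lines = [f"i {instr} {on:g} {dur:g} {amp:g} {freq:g}"
             for on, dur, amp, freq in events]
    lines.append("e")  # end-of-score statement
    return "\n".join(lines)


print(to_csound_score([(0, 1, 0.5, 440), (1, 0.5, 0.25, 660)]))
```

This prints two `i` lines (`i 1 0 1 0.5 440` and `i 1 1 0.5 0.25 660`) followed by `e`.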

Developed in collaboration with the composer Marco Stroppa, the OMChroma library controls sound synthesis processes through matrix data structures.
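A sketch of what "controlling synthesis with a matrix" can mean follows. Each row holds one synthesis parameter and each column describes one sound component, here a sinusoidal partial for a simple additive synthesizer. The row names, the dictionary layout and the `render` function are hypothetical illustrations. They do not reproduce OMChroma's actual classes, which are defined in Common Lisp and drive external synthesis engines.

```python
import math
from typing import Dict, List

# Hypothetical parameter matrix: one row per parameter, one column per partial.
matrix: Dict[str, List[float]] = {
    "freq": [220.0, 440.0, 660.0],  # Hz
    "amp": [0.5, 0.25, 0.125],      # linear amplitude
    "dur": [1.0, 0.5, 0.25],        # seconds
}


def render(m: Dict[str, List[float]], sr: int = 8000, length: float = 1.0) -> List[float]:
    """Additive synthesis: each column adds one constant-amplitude sine
    that lasts 'dur' seconds from time 0."""
    n = int(sr * length)
    out = [0.0] * n
    for freq, amp, dur in zip(m["freq"], m["amp"], m["dur"]):
        for i in range(min(n, int(sr * dur))):
            out[i] += amp * math.sin(2 * math.pi * freq * i / sr)
    return out


signal = render(matrix)
print(len(signal), max(abs(x) for x in signal) <= sum(matrix["amp"]))  # 8000 True
```

The point of the matrix layout is that a compositional process can generate or transform whole rows, for instance an interpolated series of frequencies, before the result is passed to synthesis.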

OMPrisma, the extension of OMChroma to spatialization, enables "spatialized sound synthesis" processes. Spatial attributes (positions and trajectories, but also room characteristics and the orientation or directivity of sound sources) are applied at the moment the sounds are produced. These tools are controlled in OpenMusic through a set of graphical editors and operators, and they allow synthesized sounds and spatial scenes to be specified together in great detail.

The OM-Chant project recently revived FOF synthesis (fonctions d'onde formantique, or formant wave functions). It makes it possible to produce, directly within CAC processes, synthesized sounds inspired by the model of vocal production.
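In FOF synthesis, a formant is produced by a train of short grains, one per fundamental period. Each grain is a sinusoid at the formant's centre frequency with an exponentially decaying envelope. The following sketch uses the classical grain shape in simplified form, without the final release segment found in CHANT:

- before the onset, the grain is 0;
- during the attack, the grain is ½(1 − cos βt)·e^(−αt)·sin(2π·fc·t + φ);
- after the attack, it is e^(−αt)·sin(2π·fc·t + φ).

Here α = π·bw and β = π / attack. The function names and parameter values are illustrative assumptions and do not describe the OM-Chant or CHANT interfaces.

```python
import math
from typing import List


def fof_grain(t: float, fc: float, bw: float, attack: float, phase: float = 0.0) -> float:
    """Value of one FOF grain t seconds after its onset.

    fc: formant centre frequency (Hz); bw: formant bandwidth (Hz), which sets
    the decay rate alpha = pi * bw; attack: rise time (s) of the raised-cosine
    onset, so beta = pi / attack.
    """
    if t < 0:
        return 0.0
    alpha = math.pi * bw
    beta = math.pi / attack
    env = math.exp(-alpha * t)
    if t < attack:
        env *= 0.5 * (1 - math.cos(beta * t))  # smooth attack from 0 to 1
    return env * math.sin(2 * math.pi * fc * t + phase)


def fof_train(f0: float, fc: float, bw: float, attack: float, grain_dur: float,
              sr: int = 16000, length: float = 0.1) -> List[float]:
    """Sum one grain per fundamental period 1/f0; each grain is cut
    after grain_dur seconds. f0 sets the pitch, fc the formant."""
    n = int(sr * length)
    out = [0.0] * n
    onset = 0.0
    while onset < length:
        start = math.ceil(onset * sr)  # first sample at or after the onset
        for i in range(start, min(n, start + int(grain_dur * sr))):
            out[i] += fof_grain(i / sr - onset, fc, bw, attack)
        onset += 1.0 / f0
    return out


# Illustrative values: 110 Hz pitch, one formant at 700 Hz.
train = fof_train(f0=110, fc=700, bw=80, attack=0.003, grain_dur=0.02)
```

A vowel-like sound would sum several such trains, one per formant, all sharing the same f0.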

Ircam team: Musical Representations