Jazz Ex Machina

On February 11, as part of the Présences festival, Steve Lehman and Frédéric Maurin, together with the Orchestre National de Jazz, presented Ex Machina. This distinctive new work was composed with the help of Dicy2, an environment developed by Jérôme Nika and Ircam's Musical Representations (Représentations Musicales) team, and Dicy2 also takes part in the performance in real time. The two musicians lift the veil on their creative process.

From left to right: Steve Lehman and Frédéric Maurin at Ircam © Ircam - Centre Pompidou

What were your previous experiences of interacting with computer tools, and what did you take away from them?

Frédéric Maurin: I trained in Max at Ircam a few years ago, and I used it in many compositions as part of my work with Ping Machine, the orchestra I led before the ONJ. But in that context there was no notion of interaction or of "decision-making" on the machine's part: everything was controlled. The conclusion I drew was that I needed specialists in these technologies to go further.

Steve Lehman: I'm familiar with a wide range of software environments, including Max, which I used extensively, along with Orchidea, to compose the music for Ex Machina. I have also been exploring forms of computer-assisted improvisation since at least 2005: first under the guidance of George Lewis as a doctoral student in composition at Columbia University in New York, and later in collaboration with figures such as David Wessel and Gérard Assayag at Ircam. In addition, I have been working on Dicy2, in close collaboration with the researcher Jérôme Nika, since 2016. So I'm more or less aware of the state of the art and its potential. Working with these new technological resources is often a fairly slow and incremental process. But overall, I'm very pleased with the progress Jérôme and I have made in developing increasingly nuanced and well-defined concepts regarding the aesthetics and underlying philosophies that have guided our work on human/machine interaction and musical improvisation. Working with Dicy2 has opened up a new range of listening modes for me and, as a result, new modes of composition and of real-time composition, also known as "improvisation".

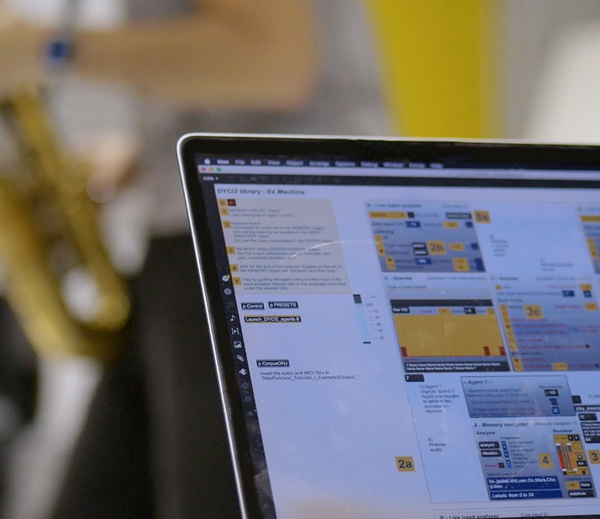

Photo: Dicy2 software, studio working session at Ircam, screenshot from the film Images d'une œuvre n°29 © Ircam - Centre Pompidou

Were you interested in how the machine works?

F.M.: For me, it's essential to try to understand how the machine works. Without that, you can't give it the right material it needs to learn, and you risk getting lost in the composition. It would be like writing for an instrument without knowing how it works: you'd be liable to write nonsense!

Moreover, understanding how it works helps define what you can expect from it, and therefore develop a coherent writing process. It's a very empirical learning process, with many failures, for me at least.

What does the machine allow you to do that you couldn't do otherwise?

S.L.: It can calculate and/or sort at incredible speed. In musical terms, this often translates into near-instantaneous analysis of extremely complex sounds and/or the proposal of hundreds of potential solutions to a particular musical problem, at the click of a button. The computer is also a tool that brings its own kind of objectivity and its own biases, quite different from mine. That alone is often very useful when evaluating musical material, comparing it with other material, and looking for connections between them.

F.M.: Its real-time analysis and calculation capabilities allow us, among other things, to track parameters that a human couldn't follow. Likewise, to generate its responses, the computer can draw on very complex and highly heterogeneous sound memories, which produces responses a human could never have imagined.

Did the machine's proposals and reactions surprise you?

F.M.: Yes, and thankfully so. I think that's a very large part of what we're looking for. If you don't want to be surprised, you might as well write out all the music!

However, the difficulty of the process we're setting up is precisely to obtain consistent behavior from one performance to the next without depriving ourselves of the element of surprise, hence the importance of the fine-tuning Jérôme carries out on the machine. Sometimes the surprise is that nothing works, either because the settings are wrong or because we haven't given the machine the right material. In most cases, things go in the direction we had imagined, but not exactly, and we end up somewhere slightly different.

Of course, when the machine interacts with a soloist, the soloist also bears part of the responsibility in this process. From that point of view, we're looking for what we look for in any form of improvisation: spontaneity, and the uniqueness of the musical moment.

S.L.: The notion of surprise is central to any undertaking involving human/computer interaction. In short, the answer is yes: I have very often been surprised by the variety of musical discourse the computer proposed, for instance the connections it drew between two seemingly disparate sound objects. It's an incredibly delicate balance to maintain, one that requires decision-making grounded in your own aesthetic sense and musical taste. At the same time, you have to know when to give the computer free rein so that it can surprise you, and give it room to help you discover something new about your own musical personality and its potential. Dicy2's potential for surprise and discovery is phenomenal! Sometimes the surprises are very pleasant. Sometimes, not so much. But after several years of working with Jérôme Nika on Dicy2, I feel we're getting better and better at unearthing the good surprises.

Photo: Steve Lehman in the studio at Ircam, screenshot from the film Images d'une œuvre n°29 © Ircam - Centre Pompidou

During the performance, did you feel you were truly interacting with a machine? How does it differ from improvising with a "human" musician?

S.L.: That's a good question. At the same time, if you want to improvise with another human musician, you can easily do so. In many ways, this brings us back to the more or less outdated human/machine duality. Of course, you can learn a great deal about the nature of musical improvisation by studying and modeling the machine's behavior according to highly nuanced musical aesthetics, drawing on the nature of human cognition and perception. But at this point, I can safely say that computer-driven improvisation can teach us a great deal about the nature of musical improvisation that improvisation between humans cannot. Starting with the fact that the computer has no feelings! It can play anything I ask of it, without my having to worry about whether it likes it, or whether I'm offending it because I play faster than it can or because I have knowledge it lacks. That alone is a good starting point.

F.M.: I rather agree with Steve: the whole point of working with the machine is precisely that it will display inhuman behavior and superhuman abilities.

Orchestre National de Jazz concert, Ex Machina, rehearsals at the Maison de la radio, screenshot from the film Images d'une œuvre n°29 © Ircam - Centre Pompidou

Are there aspects of the machine you would like to see developed further?

S.L.: Of course. I think adding more and more highly sophisticated real-time features to Dicy2 is a goal many people share. And the environment has already come a long way in that respect since my first trials in 2016. Rhythm and rhythmic intelligence are among the areas with the richest and broadest potential for improvement. The nature of rhythm perception and of sophisticated rhythmic entrainment is incredibly complex to model computationally.

F.M.: Today, without a "click" to give it the tempo, the computer cannot follow a real drummer in real time, which raises major technical problems for analyzing the musical discourse, all the more so in real time. Moreover, rhythm is also time, so the machine struggles to anticipate what comes next. Yet, on the one hand, the drums and their rhythmic language are at the heart of how the music we play has evolved, and, on the other hand, in improvisation the interaction between the soloist and the drums is a central element, involving many rhythmic subtleties, great rigor in tempo, but also, at times, a great deal of freedom.

Computing power is also always an issue: with a program like ours, about an hour and a quarter of music, we're starting to push the machine to its limits. It's like orchestral writing: tell a composer they have two horns and they'll want three, and once they have three, they'll want four! So we still have some way to go, and so much the better!

Interview by Jérémie Szpirglas

Listen to the concert as broadcast on France Musique

This work draws on research and software from the REACH project of Ircam's Musical Representations team, led by Gérard Assayag.