Within the Sound Analysis & Synthesis team, Judith Deschamps draws on the combined talents of two researchers to recreate a plausible castrato voice: research director Axel Roebel and doctoral student Frederik Bous. Here is a brief look at their work in progress.

Nearly 30 years ago, three members of the Analysis/Synthesis team, Philippe Depalle, Guillermo Garcia and Xavier Rodet, had already pulled off the feat of creating a “virtual castrato” for Gérard Corbiau’s film Farinelli, which they described in a very detailed article. They had recorded two singers (with orchestral accompaniment): a countertenor and a coloratura soprano. The principle of the synthesis was fairly simple, all things considered, but very laborious. Since each voice covered one register, the main challenge was to keep the timbre consistent across the castrato’s entire range, especially in the middle register, where the passage from one voice to the other occurs. They therefore built, for each of the two singers, a large database of notes covering every vowel and three dynamic levels, and analyzed it to extract the spectral features that characterize timbre. Considering that the castrato’s timbre was probably closer to the countertenor’s, they “adapted” the notes that were too high for him, and therefore had to be sung by the soprano, by correcting their timbre manually.

“Knowing how they went about it,” admits Axel Roebel, “the result is all the more impressive! The only real problem they noticed with this setup, apart from how tedious it was, was the impression of occasionally hearing dynamic discontinuities in the sound.”

In this new project, the goal is instead to “augment” a voice by “hybridizing” it. For passages that lie within its own range, the voice is used as is. For pitches outside its range, the idea is to record those passages transposed to a pitch the singer can reach, then transpose them back and transform them by borrowing the timbre of other voices. Six voices were therefore recorded singing the aria “Quell’usignolo che innamorato” by Geminiano Giacomelli (1692-1740), which Judith Deschamps wants to reconstruct: two children’s voices, a soprano, an alto, a countertenor and a light tenor. Of these six, the alto’s voice was chosen as the base, the others serving to fill in the pitches it cannot reach, by means of neural networks (machine-learning systems) developed by Axel Roebel and doctoral student Frederik Bous.
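One way to picture this hybridization rule is a small routing function that decides, note by note, what the alto actually sings and how far the model must then transpose it. This is a hypothetical sketch: the alto range (MIDI 53 to 77, i.e. F3 to F5), the choice to move out-of-range notes by whole octaves, and the name `plan_note` are illustrative assumptions, not details given by the project.

```python
# Hypothetical sketch: decide how each note of the aria would be produced.
# The alto range below (MIDI 53 = F3 to MIDI 77 = F5) is an assumption.
ALTO_LOW, ALTO_HIGH = 53, 77


def plan_note(midi_note: int, low: int = ALTO_LOW, high: int = ALTO_HIGH) -> tuple[int, int]:
    """Return (pitch the singer records, semitones the model must transpose afterwards).

    Notes inside the singer's range are used as is (shift 0). Notes outside it
    are moved by whole octaves until they fit, and the returned shift says how
    far the model has to transpose the recording back to the written pitch.
    """
    if high - low < 11:
        raise ValueError("the range must contain at least 12 semitones")
    recorded = midi_note
    while recorded > high:  # too high: sing it one octave lower, and so on
        recorded -= 12
    while recorded < low:   # too low: sing it one octave higher, and so on
        recorded += 12
    return recorded, midi_note - recorded


if __name__ == "__main__":
    for note in (60, 84, 91, 48):  # C4, C6, G6, C3
        rec, shift = plan_note(note)
        print(f"note {note}: record at {rec}, transpose by {shift:+d} semitones")
```

Notes already in range come back with a shift of 0 and are used untouched, which matches the "used as is" case above; only the others would go through the transposition model.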

“The basic principle,” says Axel Roebel, “is to teach a deep model to reconstruct a singer’s timbre from a given signal and a target pitch, which then makes it possible to transpose the passages the selected voice cannot reach.” The idea seems obvious; putting it into practice is far more complex.

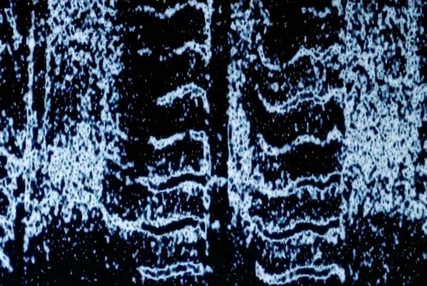

To convey the properties of a given voice to the networks, the researchers use a condensed representation of that voice, sampled over time and laid out as an image, called a “Mel-scale spectrogram” or “Mel spectrogram”. The Mel spectrogram is easier for neural networks to handle because many details of the spectrum that are irrelevant to our perception have been removed from it.
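To make the idea of a Mel spectrogram concrete, here is a minimal plain-Python sketch of how one frame of a linear-frequency power spectrum can be reduced to a smaller set of Mel bands. It assumes the common HTK formula for the Mel scale (other variants exist) and triangular filters; the band count, FFT size and sample rate in the example are illustrative, not the settings used at IRCAM.

```python
import math


def hz_to_mel(f: float) -> float:
    """HTK-style Mel scale: close to linear below ~1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sample_rate: int) -> list[list[float]]:
    """Triangular filters mapping the n_fft // 2 + 1 FFT bins onto n_mels bands."""
    n_bins = n_fft // 2 + 1
    bin_freqs = [k * sample_rate / n_fft for k in range(n_bins)]
    # n_mels + 2 edge frequencies, equally spaced on the Mel scale from 0 Hz to Nyquist.
    top = hz_to_mel(sample_rate / 2)
    edges = [mel_to_hz(top * i / (n_mels + 1)) for i in range(n_mels + 2)]
    bank = []
    for j in range(n_mels):
        left, center, right = edges[j], edges[j + 1], edges[j + 2]
        row = []
        for f in bin_freqs:
            if left <= f <= center:      # rising edge of the triangle
                row.append((f - left) / (center - left))
            elif center < f <= right:    # falling edge of the triangle
                row.append((right - f) / (right - center))
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def mel_frame(power_spectrum: list[float], bank: list[list[float]]) -> list[float]:
    """Project one FFT power-spectrum frame onto the Mel bands, then log-compress it."""
    return [math.log(1e-10 + sum(w * p for w, p in zip(row, power_spectrum))) for row in bank]
```

With 80 bands, a 1024-point FFT at 22.05 kHz (513 bins per frame) shrinks to 80 values per frame; a Mel spectrogram is the sequence of such frames over time. Libraries such as librosa provide this ready-made (`librosa.feature.melspectrogram`).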

Judith Deschamps in the IRCAM studios

First step: “Using the vocal recordings, a neural network was trained to recreate a sound from its Mel spectrogram,” explains Axel Roebel (what is known as a neural vocoder). “By comparing the result with the original recording, we measure the ‘loss’ of quality caused by the process, and it is this loss that allows the neural network to improve by itself.”
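The loop described here (predict, compare with the original, use the loss to adjust the model) can be sketched at toy scale. In this hypothetical example a single linear layer stands in for the neural vocoder, random vectors stand in for Mel frames and for the original frames to be recovered, and the mean squared error stands in for whatever loss the researchers actually use; only the training principle carries over.

```python
import random


def mse(pred: list[float], target: list[float]) -> float:
    """Mean squared error between a prediction and the original it should match."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def make_pairs(n: int, n_in: int, n_out: int, seed: int = 0):
    """Hypothetical data: random 'Mel frames' and the 'original frames' they came from."""
    rng = random.Random(seed)
    hidden = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_out)]  # unknown mapping
    pairs = []
    for _ in range(n):
        mel = [rng.uniform(-1, 1) for _ in range(n_in)]
        original = [sum(h * x for h, x in zip(row, mel)) for row in hidden]
        pairs.append((mel, original))
    return pairs


def train_toy_decoder(pairs, n_in: int, n_out: int, lr: float = 0.05, epochs: int = 200):
    """Fit W (n_out x n_in) by stochastic gradient descent so that W @ mel ~ original.

    Returns the weights and the average loss of each epoch.
    """
    W = [[0.0] * n_in for _ in range(n_out)]
    history = []
    for _ in range(epochs):
        total = 0.0
        for mel, original in pairs:
            pred = [sum(w * x for w, x in zip(row, mel)) for row in W]
            total += mse(pred, original)  # the "loss" measured against the original
            # d(MSE)/dW[i][j] = 2 / n_out * (pred_i - original_i) * mel_j
            for i in range(n_out):
                err = 2.0 * (pred[i] - original[i]) / n_out
                for j in range(n_in):
                    W[i][j] -= lr * err * mel[j]
        history.append(total / len(pairs))
    return W, history


if __name__ == "__main__":
    _, history = train_toy_decoder(make_pairs(20, 4, 6), 4, 6)
    print(f"loss: first epoch {history[0]:.4f}, last epoch {history[-1]:.6f}")
```

The falling loss curve is the toy counterpart of the network "improving by itself": nothing but the measured error drives the weight updates.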

Next, this principle was applied again, but with a pair of networks known as an “autoencoder”. The first neural network’s job is to produce not a “complete” Mel spectrogram but a reduced form of it, representing only the spectral content that does not depend on the sound’s fundamental frequency, that is, the sung pitch. It is said to “disentangle” pitch from the rest of the information in the Mel spectrogram. Here again, this sounds obvious, but it is less so than it seems, since the timbre of a voice also depends on the pitch being sung! The result is what Frederik Bous calls the sound’s “residual code”, which “encodes” timbre, phoneme, vibrato and so on.

This “residual code” (or rather these residual codes, since the process is repeated for every sound in the database) is used to train the second neural network, whose job is to reconstruct a complete Mel spectrogram from the residual code and a given fundamental frequency.

In the first phase of the joint training of these two networks, the second one is fed exactly the same fundamental frequency as the original sample. Here again, this makes it possible to compare the output with the original sound, so the networks can learn from their mistakes and improve on their own (the first by refining how it produces the residual code, the second by adjusting how it rebuilds the sound).
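The two-network arrangement and its first training phase can be illustrated with a deliberately tiny linear model. This is a hypothetical sketch, not the architecture developed by Frederik Bous and Axel Roebel: the "Mel frames" are synthetic 6-dimensional vectors built from two hidden timbre values plus a pitch value, the encoder compresses each frame into a 2-number residual code without seeing the pitch, and the decoder rebuilds the frame from that code plus the original pitch. Both networks are updated together from the same reconstruction error. The toy only shows the data flow and the joint loss; it does not by itself guarantee a pitch-free code, which is precisely the hard part mentioned above.

```python
import random

D, K = 6, 2  # size of a toy "Mel frame" and of the residual code (the bottleneck)


def make_toy_data(n: int, seed: int = 0):
    """Synthetic frames x = A @ timbre + b * pitch (2 timbre values, 1 pitch value)."""
    rng = random.Random(seed)
    A = [[rng.gauss(0, 0.5) for _ in range(K)] for _ in range(D)]
    b = [rng.gauss(0, 0.5) for _ in range(D)]
    data = []
    for _ in range(n):
        timbre = [rng.uniform(-1, 1) for _ in range(K)]
        pitch = rng.uniform(-1, 1)  # stands in for a normalised log-f0
        x = [sum(A[i][k] * timbre[k] for k in range(K)) + b[i] * pitch for i in range(D)]
        data.append((x, pitch))
    return data


def train_autoencoder(data, lr: float = 0.05, epochs: int = 200, seed: int = 1):
    """Jointly train encoder E and decoder (W, c) on the MSE, feeding the ORIGINAL pitch."""
    rng = random.Random(seed)
    E = [[rng.gauss(0, 0.3) for _ in range(D)] for _ in range(K)]  # encoder: frame -> code
    W = [[rng.gauss(0, 0.3) for _ in range(K)] for _ in range(D)]  # decoder: code -> frame
    c = [0.0] * D                                                   # decoder: pitch -> frame
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, pitch in data:
            code = [sum(E[k][j] * x[j] for j in range(D)) for k in range(K)]
            x_hat = [sum(W[i][k] * code[k] for k in range(K)) + c[i] * pitch for i in range(D)]
            total += sum((x_hat[i] - x[i]) ** 2 for i in range(D)) / D
            g = [2.0 * (x_hat[i] - x[i]) / D for i in range(D)]          # dLoss/dx_hat
            g_code = [sum(g[i] * W[i][k] for i in range(D)) for k in range(K)]  # backprop to code
            for i in range(D):                                           # decoder update
                c[i] -= lr * g[i] * pitch
                for k in range(K):
                    W[i][k] -= lr * g[i] * code[k]
            for k in range(K):                                           # encoder update
                for j in range(D):
                    E[k][j] -= lr * g_code[k] * x[j]
        history.append(total / len(data))
    return (E, W, c), history


if __name__ == "__main__":
    _, history = train_autoencoder(make_toy_data(30))
    print(f"reconstruction loss: {history[0]:.4f} -> {history[-1]:.6f}")
```

The single error signal `g` feeds both updates, which is the sense in which the two networks are trained "jointly" here.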

Because of the way it works, the autoencoder can already perform transpositions (by feeding it a fundamental frequency different from the original one), even though it has never been trained to do so. However, the further this frequency is moved, the more the system has to alter the Mel spectrogram it outputs.
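Feeding a different fundamental frequency amounts to giving the decoder a modified f0 contour alongside the unchanged residual code. A minimal sketch of that modification, assuming a contour given in Hz for each analysis frame, with 0 marking unvoiced frames (a common convention, assumed here), and equal-tempered semitones; the helper name `transpose_f0` is hypothetical.

```python
def transpose_f0(f0_contour: list[float], semitones: float) -> list[float]:
    """Shift a frame-wise f0 contour (Hz) by a number of equal-tempered semitones.

    Each semitone multiplies the frequency by 2 ** (1 / 12), so 12 semitones
    double it. Frames with f0 == 0 are treated as unvoiced and left at 0.
    """
    ratio = 2.0 ** (semitones / 12.0)
    return [f * ratio if f > 0 else 0.0 for f in f0_contour]


if __name__ == "__main__":
    contour = [440.0, 445.0, 0.0, 435.0]   # a few frames with slight vibrato
    print(transpose_f0(contour, 12))      # one octave up: every voiced frame doubled
```

Because the ratio is applied frame by frame, the vibrato shape of the original contour is preserved; the larger the shift, the more the decoder has to "invent" to match it.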

“But altering also means inventing!” Axel Roebel points out. “And to improve these ‘inventions’, we can no longer compare them with an existing signal, because no singer can sing the same melody transposed beyond the limits of their own range. So we lack a ‘target’ to give the system. For that, we use a new network, trained on the unprocessed voices. This new network, called a ‘discriminator’, is tasked with ‘critiquing’ the inventions of our transposition tool by comparing them with what it knows, and judging whether or not they are plausible.”

Here again, the two neural networks train each other: the transposer “transposes” and the discriminator “critiques”, trying to guess whether the sounds produced are the result of a transposition by the first network and, if so, to what extent. As a result, the first produces increasingly plausible sounds while the second becomes increasingly sharp in its critiques… Everybody wins.
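This adversarial game is the principle of generative adversarial networks (GANs). The article does not say which loss formulation the project uses; the sketch below assumes the least-squares variant (LSGAN), one common choice, and works directly on the discriminator’s scores (close to 1 for “sounds like an untouched recording”, close to 0 for “sounds transposed”). The function names are hypothetical.

```python
def mean(values: list[float]) -> float:
    return sum(values) / len(values)


def discriminator_loss(scores_real: list[float], scores_fake: list[float]) -> float:
    """LSGAN critic loss: push scores of real recordings towards 1, of transposed output towards 0."""
    return mean([(s - 1.0) ** 2 for s in scores_real]) + mean([s ** 2 for s in scores_fake])


def generator_loss(scores_fake: list[float]) -> float:
    """LSGAN loss for the transposer: it shrinks when its output is scored like real audio."""
    return mean([(s - 1.0) ** 2 for s in scores_fake])


if __name__ == "__main__":
    # A discriminator that is unsure about everything (score 0.5) is penalised on both sides.
    print(discriminator_loss([0.5], [0.5]))
    print(generator_loss([0.5]))
```

In training, the two losses are minimised in alternation: one update of the discriminator on `discriminator_loss`, then one update of the transposer on `generator_loss`, so that each network’s progress raises the bar for the other.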

This penultimate stage, incidentally, was only just getting under way at the time of writing. “The hope,” says Axel Roebel, “is that the neural network will thereby improve the quality of its transpositions and reduce the losses until they become undetectable. At present we manage this over two octaves. Ideally, we would like to reach three and a half octaves.”
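These figures are easier to grasp as frequency ratios, assuming they refer to the span of pitches over which transposition works: each octave doubles the frequency, so two octaves correspond to a factor of 4 and three and a half octaves to a factor of 2^3.5, about 11.3. A short check of that arithmetic, with hypothetical helper names:

```python
import math


def octave_span(f_low: float, f_high: float) -> float:
    """Number of octaves between two frequencies (each octave doubles the frequency)."""
    return math.log2(f_high / f_low)


def frequency_ratio(octaves: float) -> float:
    """Frequency ratio covered by a given number of octaves."""
    return 2.0 ** octaves


if __name__ == "__main__":
    print(frequency_ratio(2.0))                  # 4.0
    print(round(frequency_ratio(3.5), 2))        # 11.31
    print(round(220.0 * frequency_ratio(3.5)))   # from A3 (220 Hz): 2489 Hz
```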

The final stage will then be to do what the project set out to do from the start: augment the singer by extending her singing to every pitch, in a coherent and plausible way. “The principle,” explains Frederik Bous, “is actually the same as that of ‘deepfakes’ for faces and videos.”

“By working this way,” explains Axel Roebel, “we create a hybrid voice with no break in its timbre from one pitch to another.”