"Human-machine co-creativity will radically change our musical experience"

With preparations in full swing, Greek journalist Thanos Madzanas met Gérard Assayag, a member of the organizing committee. A researcher at Ircam, where he heads the Music Representations team, he explains the new links emerging between music, improvisation and artificial intelligence. What is meant by improvised interactions and human-machine co-creativity? And what of their impact on musical creation and, more broadly, on human experience?

What motivated Ircam to launch ImproTech? Was it primarily for scientific, educational or musical reasons, or all three at once?

Ircam is a unique place for musical research and creation, and ImproTech looks like the ideal combination of the two. Built around the idea of musical improvisation in interaction with digital intelligence, the conference brings together academics, researchers, musicians and makers. Its aim is to link the European and worldwide research and creation scenes through the symbolic meeting of Paris, where the event was born at Ircam, and a city emblematic for its cultural influence.

How do you choose the city you collaborate with each year? Why New York, Philadelphia and now Athens, the first European city to host ImproTech?

It comes down to opportunities for collaboration: New York University, Columbia University, the University of Pennsylvania, the University of Athens and Onassis STEGI are all major cultural or educational centers that showed interest in our project and offered their facilities and support. And indeed, we are proud that Athens is the first European city to take part in this series of conferences. Athens is a beacon in the history of humanity, and Greek culture has had a fundamental impact on the relationship between art and rationality.

Ircam and the Onassis Cultural Centre have long collaborated on several levels and belong to the same European network. What, then, led you to choose the Department of Music Studies of the University of Athens (UOA) as the other co-organizer of ImproTech in Athens?

There are also long-standing collaborations between Ircam and the UOA, notably thanks to Anastasia Georgakis, head of the musical acoustics and recording laboratory, who was a doctoral student at Ircam. This cooperation has led to joint workshops, courses, student exchanges and now ImproTech.

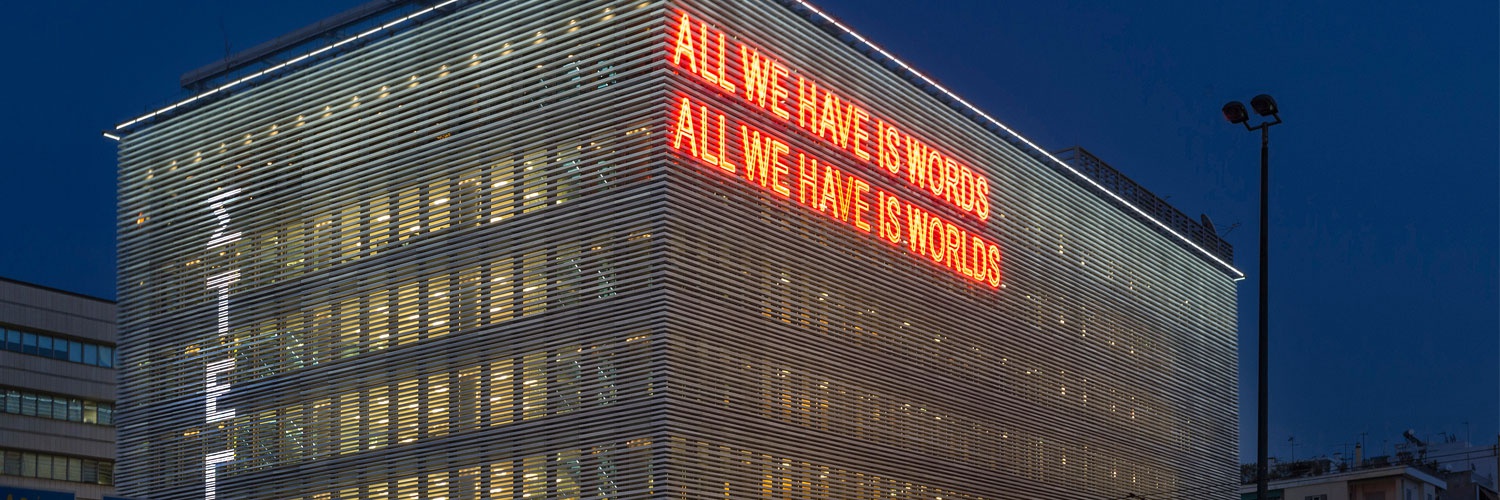

Onassis STEGI, Athens

Ircam has always been at the forefront of research in general, and more specifically of technological research into both the forms and the sounds through which music can unfold. I gather, then, that your interest in artificial intelligence and your work on its possible applications to music are not recent?

Many AI-related projects are under way at Ircam, and the STMS laboratory ("Sciences et techniques de la musique et du son", a joint research unit of Ircam, the CNRS and Sorbonne Université) has always been a pioneer in computer-assisted musical practice. What interests us here is creative artificial intelligence applied to understanding and creating music. AI in music is in fact one of the themes of ImproTech in Athens, with a series of talks titled "Algorithms, AI and Improvisation" on Friday 27 September.

How, and how far, do you think AI can affect both the concept and the process of improvisation? Beyond the scientific and perhaps musicological interest, do you see real musical value in it? Do the creative process and music-making itself stand to gain?

My team, Music Representations at Ircam, and its partners at EHESS and UCSD have developed the concept of human-machine co-creativity, which in a way answers this question. Co-creativity is an emergent phenomenon: it appears when complex cross-feedback loops of learning and generation arise between natural and artificial agents. And because this is a complex system, its properties cannot be reduced to those of the interacting agents.

In that sense, there is no longer any need to wrestle with the thorny philosophical question of whether artificial creativity is really possible. What matters is how creative effects emerge from a complex interaction that also involves humans. This is where artificial intelligence is a great help, because it provides methods for machine listening, structure discovery and generative learning. But intelligent interaction architectures must also be designed so that they allow co-creativity to emerge.

More generally, do you think artificial intelligence can not only make musical creation easier and more efficient, but also improve it, and even improve the musical experience as a whole, as we humans bring it about and perceive it? And if so, in what ways and to what extent?

Co-creativity between humans and machines will give rise to repertoires of information structures of a new kind, which will have a profound impact on individual and collective human development. With technologies that extract semantic features from physical and human signals, combined with generative learning of high-level representations, we are beginning to shed light on the growing complexity of the cooperation, synergies and conflicts inherent in cyber-human networks. By understanding, modeling and developing human-machine co-creativity in the musical domain, improvised interactions will allow musicians, whatever their level of training, to develop their skills and increase their individual and social creative potential.

Using computers, like any other electronic sound source, as tools is one thing. But with AI, computers cease to be tools and start to become autonomous creators of music in their own right. As the second decade of the 21st century draws to a close, is this really what humanity aspires to?

As I said, we stand beyond the question of artificial creativity, with a far more powerful and human concept, that of co-creativity. Nothing makes sense without humans, so we are not in some transhumanist dream (or nightmare).

In your view, would a musical work entirely created and performed by AI be exactly the same as a work composed by a human being? Would it have the same artistic and aesthetic value, and could it ultimately be as good (or as bad, of course) as one created by a human?

By definition, if it were the same thing, well, it would be the same [he smiles].

But in art in general, it is not only the making of the artifact that counts; there is also the way artists introduce it into its social and anthropological context, which is a matter of historical impact. A machine could design Marcel Duchamp's urinal, but would it be intelligent enough to create and manipulate the provocative context in which that work acquired its disruptive meaning in the history of art? Duchamp's genius did not show itself in the mere form or materiality of the object.

Personally, and as a member of the Ircam team, do you fear that one day computers and artificial intelligence will completely replace humans as creators of music? Do you think the moment will come when one or more algorithms compose music with no human intervention, but to order, or even following the very strict directives of those who profit from controlling human creativity and even human beings themselves?

That is entirely conceivable for bad commercial music made for utilitarian ends, but how would the result be any worse than what mainstream media expose us to today? There will always be creators of authentic music, even if they are a minority, and creators are never afraid to embrace new technologies.

In your view, which of the scientific and educational sessions offered by ImproTech in Athens are the most important and interesting, and why?

They are all remarkable!

But to get a quick sense of a field, I would say the keynotes are a must.

And as for the concerts, which ones seem most interesting to you? Are you looking forward to attending any in particular?

All three concerts will be free, each evening featuring an incredible array of artists from around the world, with a good balance between young musicians and world-renowned headliners. It would be a shame to miss even one [he smiles]!

Interview conducted by Thanos Madzanas, Greek journalist, author, music critic and writer

Published in Greek in the Blogs section of HuffPost.gr, as part of a series on Athens IMPROTECH ’19.