Synthèse concaténative par corpus

La synthèse concaténative par corpus utilise une base de données de sons enregistrés, segmentés et indexés par leurs caractéristiques sonores, telles que leur hauteur, ou leur timbre. Cette base nommée corpus, est exploitée par un algorithme de sélection d’unités qui choisit les segments du corpus qui conviennent le mieux pour la séquence musicale que l’on souhaite synthétiser par concaténation. La sélection est fondée sur les descripteurs sonores qui caractérisent les enregistrements, obtenus par analyse du signal et correspondant par exemple à la hauteur, l’énergie, la brillance, ou la rugosité.

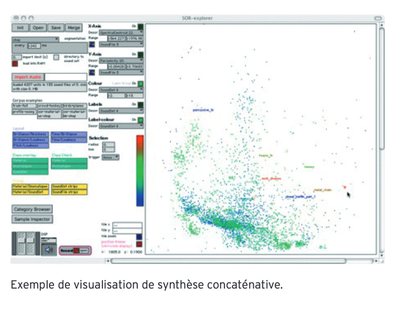

Les méthodes de synthèse musicale habituelles sont fondées sur un modèle du signal sonore, mais il est très difficile d’établir un modèle qui préserverait la totalité des détails et de la finesse du son. En revanche, la synthèse concaténative, qui utilise des enregistrements réels, préserve ces détails. La mise en œuvre de la nouvelle approche de synthèse sonore concaténative par corpus en temps réel permet une exploration interactive d’une base sonore et une composition granulaire ciblée par des caractéristiques sonores précises, et permet aux compositeurs et musiciens d’atteindre de nouvelles sonorités. Si la position cible de la synthèse est obtenue par analyse d’un signal audio en entrée, on parle alors d’audio mosaicing.

La synthèse concaténative par corpus et l’audio mosaicing par similarité spectrale sont réalisés par la bibliothèque MuBu de modules optimisés pour Max, et dans le système CataRT, qui permet, via l’affichage d’une projection 2D de l’espace des descripteurs, une navigation simple avec la souris, par des contrôleurs externes, ou par l’analyse d’un signal audio. CataRT, comme bibliothèque de modules pour Max, comme device pour Ableton Live, ou comme application indépendante, est utilisé dans des contextes musicaux de composition, de performance et d’installation sonore variés.