(DYCI2) Creative agents, improvised interactions and “meta-composition”

The team explores the paradigm of computational creativity using systems inspired by artificial intelligence. This work follows two directions: new symbolic interactions between musicians and machines, and data science and knowledge extraction.

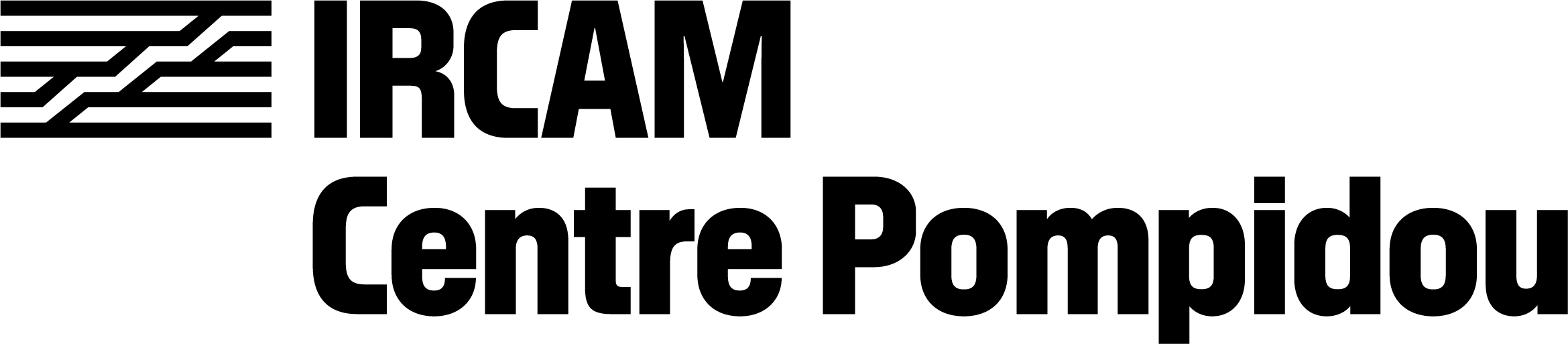

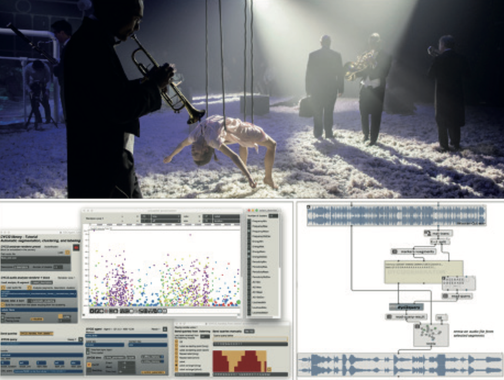

Building on the Omax software, research on learning and interactive music generation has produced several paradigms of musician-machine interaction. They share an architecture that combines machine listening of the audio signal, discovery of a symbolic vocabulary, statistical learning of a sequence model, and generation of new musical sequences through reactive and/or planning (scenario-based) mechanisms.
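The text does not say which sequence model is learned. As an illustration only, the sketch below builds a factor oracle, the automaton classically associated with Omax-style improvisation, over a sequence of symbols standing in for the discovered vocabulary (for example, labeled audio segments). It then navigates the oracle reactively: it mostly replays the memory and sometimes jumps back along a suffix link to an earlier point that shares the recent context, which recombines the material without breaking local continuity. The names `build_factor_oracle` and `improvise` and the parameter `p_continue` are hypothetical, and scenario-based planning is not shown.

```python
import random


def build_factor_oracle(seq):
    """Online factor-oracle construction (Allauzen, Crochemore & Raffinot).

    Returns (trans, sfx): trans[i] maps a symbol to a target state and
    sfx[i] is the suffix link of state i (sfx[0] == -1).
    """
    n = len(seq)
    trans = [dict() for _ in range(n + 1)]
    sfx = [-1] * (n + 1)
    for i, sym in enumerate(seq, start=1):
        trans[i - 1][sym] = i          # factor link along the original sequence
        k = sfx[i - 1]
        while k > -1 and sym not in trans[k]:
            trans[k][sym] = i          # forward jump: new continuation of an earlier context
            k = sfx[k]
        sfx[i] = 0 if k == -1 else trans[k][sym]
    return trans, sfx


def improvise(seq, length, p_continue=0.7, seed=None):
    """Hypothetical reactive navigation: mostly replay the memory, sometimes
    jump back along a suffix link to a state that shares the recent context."""
    if not seq:
        raise ValueError("empty memory")
    _, sfx = build_factor_oracle(seq)
    rng = random.Random(seed)
    n, state, out = len(seq), 0, []
    for _ in range(length):
        at_end = state >= n
        if at_end or (sfx[state] > 0 and rng.random() > p_continue):
            state = max(sfx[state], 0)   # recombine: continue from a shared context
        out.append(seq[state])           # symbol on the transition state -> state + 1
        state += 1
    return out


trans, sfx = build_factor_oracle("abab")
print(sfx)  # → [-1, 0, 0, 1, 2]
print("".join(improvise("abracadabra", 16, seed=3)))
```

With `p_continue=1.0` the navigation simply replays the memory and loops back through the last suffix link. Lower values yield more recombination. A suffix link always leads to a state reached by the same symbol that was just played, so each emitted pair of consecutive symbols also occurs in the original sequence.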

The DYCI2 project puts improvised interaction at the forefront in three roles: as an anthropological and cognitive model of action and decision, as a framework for discovery and unsupervised learning, and as a discursive tool for exchange between humans and digital artifacts. The aim is to model both style and interaction.

The goal is to build autonomous creative agents that learn directly from exposure to the live playing of improvising human musicians. The human musicians are themselves exposed to the improvised output of these digital artifacts, which creates a stylistic feedback loop. Learning therefore emerges from a human-artifact communication situation that evolves through a complex dynamic of co-creativity.

These agents/instruments also contribute to a search for new “AI-informed” generative paradigms. One example is coupling the generative mechanisms with modules that extract and infer the harmonic grid (chord chart) in real time.
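The section does not describe how the harmonic grid is inferred. The following minimal sketch uses plain template matching, a simple technique chosen here for illustration and not necessarily the one used by the team. Each beat's notes are reduced to a 12-bin pitch-class profile and scored against the 24 major and minor triad templates. Consecutive identical labels are then merged into a grid of (chord, duration in beats) segments. The input format (one list of MIDI note numbers per beat) and the names `chroma_from_midi`, `label_chord` and `infer_grid` are hypothetical.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def _triad_templates():
    """Binary 12-bin templates for the 24 major and minor triads."""
    templates = {}
    for root in range(12):
        for suffix, intervals in (("", (0, 4, 7)), ("m", (0, 3, 7))):
            vec = [0.0] * 12
            for iv in intervals:
                vec[(root + iv) % 12] = 1.0
            templates[NOTE_NAMES[root] + suffix] = vec
    return templates


TEMPLATES = _triad_templates()


def chroma_from_midi(notes):
    """Count active MIDI notes per pitch class (a crude chroma vector)."""
    chroma = [0.0] * 12
    for n in notes:
        chroma[n % 12] += 1.0
    return chroma


def label_chord(chroma):
    """Return the triad whose template has the largest dot product with chroma.

    Every template contains exactly three ones, so raw dot products are
    comparable; ties go to the first template in C, Cm, C#, C#m, ... order.
    """
    return max(TEMPLATES, key=lambda name: sum(c * t for c, t in zip(chroma, TEMPLATES[name])))


def infer_grid(beats):
    """Label each beat and merge repeated labels into (chord, n_beats) segments."""
    grid = []
    for notes in beats:
        label = label_chord(chroma_from_midi(notes))
        if grid and grid[-1][0] == label:
            grid[-1] = (label, grid[-1][1] + 1)
        else:
            grid.append((label, 1))
    return grid


print(infer_grid([[60, 64, 67], [60, 64, 67, 72], [57, 60, 64], [55, 59, 62, 65]]))
# → [('C', 2), ('Am', 1), ('G', 1)]
```

This version processes a whole list of beats at once. A real-time module would instead label each incoming beat and update the last segment incrementally, which `infer_grid` already does one beat at a time. Richer chord vocabularies (sevenths, extensions) would need more templates or a learned model.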

DYCI2 served as the foundation for large-scale productions that were validated by expert musicians (Pascal Dusapin, Bernard Lubat, Steve Lehman, Rémi Fox, Hervé Sellin, etc.), as well as for workshops and festivals (Festival Improtech Paris-Athina and Paris-Philly; MassMoca, Cycling ’74, etc.). Its research themes now continue in two projects: the ANR project MERCI (Mixed Musical Reality with Creative Instruments), coordinated by G. Assayag with EHESS and the startup HyVibe, and the ERC Advanced Grant REACH, led by G. Assayag.

Building powerful and realistic human-machine environments for improvisation requires more than the software engineering of creative agents that can listen to and generate audio signals. This line of research proposes to radically renew the paradigm of improvised human-machine interaction. It does so by establishing a continuum from co-creative musical logic to a form of “physical interreality” (a mixed-reality scheme in which the physical world is actively modified) anchored in acoustic instruments.

Team involved: Musical Representations

Image 1: “Lullaby Experience” – DYCI2 library – om-dyci2 library / Image 2: Human-machine musical interaction paradigms