A brief, subjective chronology of artificial intelligence in music composition

The beginnings

Electronic music, that is, music made with electronic musical instruments, has been produced since the end of the 19th century. But producing sound with a computer first requires computers, and the oldest known recording of computer-generated music is the one produced in 1951 by the machine built in Alan Turing's laboratory in Manchester. Below you can listen to this recording, restored by Copeland and Long, together with the account of the night of programming that brought it into being, followed by Turing's reaction on hearing the result the next morning:

In the late 1940s, Alan Turing realized that he could produce notes of different pitches by modulating the signal driving the loudspeaker attached to his computer, a loudspeaker that was then used to signal the end of a series of computations. Christopher Strachey soon exploited this little trick to produce his first melodies: the national anthem (God Save the King), a nursery rhyme, and Glenn Miller's In the Mood.

At the end of the summer of 1952, Christopher Strachey developed a "complete game of draughts playing at a reasonable speed". He was also behind the strange love letters that began appearing on the notice board of the University of Manchester's computing department in August 1953.

Strachey's method for generating his love letters starts from a template and randomly substitutes preselected words at certain places in the sentence. Each of these places corresponds to a category, and each category draws on a predefined collection of words. Strachey's algorithm is as follows:

1. "You are my" Adjective Noun

2. "My" Adjective (optional) Noun Adverb (optional) Verb "your" Adjective (optional) Noun

Generate "Yours" Adverb "M.U.C."

Quoted strings are output verbatim, capitalized words are slots filled from the corresponding word collection, and "Generate" is an algorithmic instruction. (M.U.C. stands for "Manchester University Computer".)
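The slot-filling procedure above can be sketched in a few lines of Python. This is a minimal illustration, not Strachey's program: the word lists are short hypothetical samples, each sentence picks one of the two patterns with equal odds, optional slots are filled half of the time, and the letter has five sentences; all of these choices are assumptions.

```python
import random

# Hypothetical sample word lists; Strachey's own lists were much longer.
ADJECTIVES = ["beloved", "darling", "precious", "tender", "wistful"]
NOUNS = ["heart", "longing", "desire", "fancy", "affection"]
ADVERBS = ["ardently", "tenderly", "keenly", "wistfully"]
VERBS = ["cherishes", "craves", "longs for", "treasures"]

def optional(rng, words):
    """Fill an optional slot half of the time (the 50% rate is an assumption)."""
    return rng.choice(words) if rng.random() < 0.5 else None

def sentence(rng):
    if rng.random() < 0.5:
        # Pattern 1: "You are my" Adjective Noun
        return f"You are my {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)}."
    # Pattern 2: "My" [Adjective] Noun [Adverb] Verb "your" [Adjective] Noun
    words = ["My", optional(rng, ADJECTIVES), rng.choice(NOUNS),
             optional(rng, ADVERBS), rng.choice(VERBS),
             "your", optional(rng, ADJECTIVES), rng.choice(NOUNS)]
    return " ".join(w for w in words if w) + "."

def love_letter(n_sentences=5, seed=None):
    """Body of n_sentences sentences, then the closing: 'Yours' Adverb 'M.U.C.'."""
    rng = random.Random(seed)
    body = " ".join(sentence(rng) for _ in range(n_sentences))
    return f"{body}\nYours {rng.choice(ADVERBS)},\nM.U.C."

print(love_letter(seed=2))
```

The fixed strings and the slot categories map one-to-one onto the template lines, which is what makes this kind of generator easy to read and to extend with new patterns.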

This is in fact the same process used in the 18th century by the Musikalisches Würfelspiel ("musical dice game") to generate music at random from a pool of pre-composed options. One of the oldest surviving examples is Der allezeit fertige Menuetten– und Polonaisencomponist ("The ever-ready minuet and polonaise composer"), published in 1757 by Johann Philipp Kirnberger. Here is an example, performed by the Kaiser Quartet:

Carl Philipp Emanuel Bach followed the same approach in 1758 with Einfall, einen doppelten Contrapunct in der Octave von sechs Tacten zu machen, ohne die Regeln davon zu wissen ("A method for writing six bars of double counterpoint at the octave without knowing its rules"). Another, perhaps better-known example is Wolfgang Amadeus Mozart's Musikalisches Würfelspiel K. 516f. Here is its Trio No. 2, presented by Derek Houl:
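The lookup mechanism these dice games share can be sketched as follows: for each bar position, throw dice and use the total to pick one of several pre-composed bars. The layout (16 bar positions, two dice, hence 11 options per position) follows how such games are commonly described and should be treated as an assumption; the bar labels are hypothetical stand-ins for notated measures.

```python
import random

def make_table(n_bars=16):
    """Hypothetical table: for each bar position, one pre-composed option per dice total (2 to 12)."""
    return [
        {total: f"bar{position + 1}_option{total}" for total in range(2, 13)}
        for position in range(n_bars)
    ]

def roll_piece(table, seed=None):
    """Pick one pre-composed bar per position by throwing two six-sided dice."""
    rng = random.Random(seed)
    piece = []
    for options in table:
        total = rng.randint(1, 6) + rng.randint(1, 6)
        piece.append(options[total])
    return piece

table = make_table()
print(roll_piece(table, seed=42))
# Each position has 11 options, so the table encodes 11 ** 16 possible pieces.
```

Note that two dice make middle totals (around 7) more likely than extreme ones, so some pre-composed bars are heard far more often than others.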

In those days, choices were made by rolling dice. In 1957, a computer took over: Lejaren Hiller, working with Leonard Isaacson, programmed one of the first computers, the ILLIAC at the University of Illinois at Urbana-Champaign, to produce what is considered the first score generated entirely by computer. Titled Illiac Suite, it later became String Quartet No. 4.

The piece is a pioneering work for string quartet, the outcome of four experiments. The two composers, both university professors, explicitly stressed the "research" character of the suite, which they regarded as a laboratory experiment. The compositional rules and procedures that define the music of a given period were turned into automatic algorithmic processes: the first experiment generates cantus firmi, the second generates four-voice segments governed by various rules, the third deals with rhythm, dynamics and playing instructions, and the fourth explores various stochastic processes.

Whether in a musical dice game or in the Illiac Suite, a dialectic emerges between a set of rules governing the structure and form of a piece, and the randomness used to ensure variety and to explore the immense space of combinatorial possibilities. This dialectic is at work in almost every automated composition system.
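The rules-versus-chance dialectic can be sketched as a generate-and-test loop: chance proposes a note, the rules accept or reject it. The rules below are simplified hypotheticals chosen for illustration (start and end on C, no repeated notes, no leap wider than a fifth), not Hiller and Isaacson's actual rule set.

```python
import random

SCALE = [60, 62, 64, 65, 67, 69, 71, 72]  # C major from C4 to C5, as MIDI note numbers
TONIC = 60

def allowed(melody, note):
    """Hypothetical, simplified rules (not the Illiac Suite's own)."""
    if not melody:
        return note == TONIC              # begin on the tonic
    previous = melody[-1]
    if note == previous:
        return False                      # no immediate repetition
    return abs(note - previous) <= 7      # no leap wider than a perfect fifth

def cantus_firmus(length=8, seed=None, max_attempts=10_000):
    rng = random.Random(seed)
    for _ in range(max_attempts):
        melody = []
        while len(melody) < length - 1:
            note = rng.choice(SCALE)      # chance proposes...
            if allowed(melody, note):     # ...the rules dispose
                melody.append(note)
        if allowed(melody, TONIC):        # the final note must also be the tonic
            return melody + [TONIC]
    raise RuntimeError("no admissible melody found")

print(cantus_firmus(seed=7))
```

Adding rules narrows the space of outputs; loosening them lets randomness dominate. Tuning that balance is exactly the dialectic described above.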

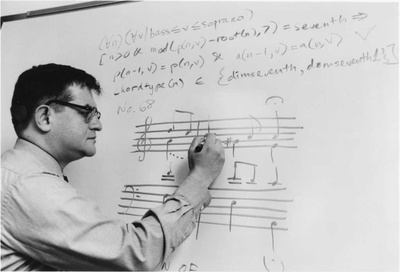

Around the same time in France, Iannis Xenakis was also exploring many stochastic processes to generate musical material. He would go on to draw on other mathematical notions to devise new generative musical processes. In his book Musiques formelles (1963), for example, he shows how he applied probability theory (in Pithoprakta and Achorripsis, 1956-1957), set theory (Herma, 1960-1961) and game theory (Duel, 1959, and Stratégie, 1962) to his compositional work.
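On the probabilistic side, Xenakis's account of Achorripsis relies on the Poisson law to decide how many sound events fall into each cell of a grid crossing timbre groups and time segments. The sketch below only illustrates that idea: the grid size and the mean density `lam` are hypothetical parameters, and the sampler is Knuth's classic multiplication method for Poisson variates.

```python
import math
import random

def poisson(rng, lam):
    """Draw one Poisson(lam) variate with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, product = 0, rng.random()
    while product >= threshold:
        k += 1
        product *= rng.random()
    return k

def event_grid(n_timbres, n_segments, lam, seed=None):
    """Number of sound events per (timbre group, time segment) cell."""
    rng = random.Random(seed)
    return [[poisson(rng, lam) for _ in range(n_segments)] for _ in range(n_timbres)]

for row in event_grid(n_timbres=3, n_segments=12, lam=0.6, seed=1):
    print(row)
```

With a low mean density, most cells stay empty and a few hold one or two events, which produces a sparse texture whose overall statistics are controlled while the individual placements are left to chance.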

Expert systems and symbolic knowledge representation

Let us now jump ahead to the 1980s, which saw an explosion of expert systems. This family of techniques takes a logical approach to knowledge representation and inference: a set of predefined rules is applied to facts in order to carry out a line of reasoning or answer a question. These systems were used to generate scores by making explicit the rules that describe a musical form or a composer's style. The rules of fugue, or Schenkerian analysis, for example, were used to produce harmonizations in the style of Bach.
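The "rules applied to facts" principle can be sketched as a tiny forward-chaining engine, an inference style used by many expert systems: a rule fires whenever all its premises are known facts, adding its conclusion, until nothing new can be derived. The facts and rules here are hypothetical toy examples about cadences, far simpler than any real system.

```python
# Each rule: (set of premises, conclusion). Hypothetical toy knowledge base.
RULES = [
    ({"chord:V", "next:I"}, "cadence:authentic"),
    ({"chord:IV", "next:I"}, "cadence:plagal"),
    ({"chord:V", "next:vi"}, "cadence:deceptive"),
    ({"cadence:authentic", "position:phrase-end"}, "phrase:closed"),
]

def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

start = {"chord:V", "next:I", "position:phrase-end"}
print(sorted(forward_chain(start, RULES) - start))
# prints ['cadence:authentic', 'phrase:closed']
```

Because every derived fact can be traced back to the rule that produced it, such systems can explain their conclusions, which is the "explicit and explainable" quality of symbolic AI discussed later.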

A remarkable example of this rule-based approach is the work of Kemal Ebcioglu in the late 1980s. In his doctoral thesis ("An Expert System for Harmonization of Chorales in the Style of J.S. Bach"), he developed the CHORAL system, which rests on three principles:

- encoding a large amount of knowledge about the musical style in question

- using constraints both to generate solutions automatically (with backtracking algorithms) and to eliminate unacceptable ones (which requires rules for evaluating the quality of the result)

- using style-specific heuristics to prioritize the algorithm's choices when extending a partially existing composition.
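The combination of constraints, backtracking and heuristic ordering can be illustrated with a toy bass-line harmonizer. It is a sketch of the general technique, not CHORAL: the constraints (consonance with the melody, no bass leap wider than a fifth, no parallel fifths or octaves) are a hypothetical, drastically reduced rule set, and the only heuristic is to try the smallest bass leap first.

```python
CONSONANT = {0, 3, 4, 7, 8, 9}   # interval classes treated as consonant (simplified)
PERFECT = {0, 7}                 # unison/octave and fifth

def acceptable(soprano, bass, i, note):
    """Check a candidate bass note at position i against the (hypothetical) constraints."""
    s = soprano[i]
    if note >= s or (s - note) % 12 not in CONSONANT:
        return False
    if i > 0:
        prev_s, prev_b = soprano[i - 1], bass[i - 1]
        if abs(note - prev_b) > 7:
            return False                       # no bass leap wider than a fifth
        prev_int, cur_int = (prev_s - prev_b) % 12, (s - note) % 12
        both_move = s != prev_s and note != prev_b
        if both_move and prev_int == cur_int and cur_int in PERFECT:
            return False                       # parallel fifths or octaves
    return True

def harmonize(soprano, low=40, high=60):
    """Depth-first search with backtracking; returns a bass line or None."""
    bass = []

    def search(i):
        if i == len(soprano):
            return True
        candidates = list(range(low, high + 1))
        if bass:  # heuristic: try the smoothest bass motion first
            candidates.sort(key=lambda n: abs(n - bass[-1]))
        for note in candidates:
            if acceptable(soprano, bass, i, note):
                bass.append(note)
                if search(i + 1):
                    return True
                bass.pop()             # dead end: undo and try the next candidate
        return False

    return bass if search(0) else None

print(harmonize([72, 74, 76, 77, 79]))  # C5 D5 E5 F5 G5 as MIDI note numbers
```

The constraints both prune the search and guarantee that any returned line is acceptable; the heuristic only changes which acceptable solution is found first.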

Here is an example of a chorale harmonization (first Bach's original harmonization, then the result produced by CHORAL at 4:42). The program notes outline the expert system:

Another remarkable example from the same decade is EMI (Experiments in Musical Intelligence), developed by David Cope at the University of California, Santa Cruz. Cope began working on the system while he was stuck writing an opera:

"I decided to take the plunge and use a form of artificial intelligence I knew to program something that would produce music in my own style. The idea was that I would say to myself, 'Oh, I would never write something like that!' and would then feel compelled to leave the computer and go write what I would have written instead. So it was a bit of a provocation, something that would give me the push to go and compose."

The system thus analyzes the pieces it is given as representative examples of a "style", then uses this analysis to generate new pieces in the same style. But when EMI analyzed Cope's own pieces, it confronted the composer with his own idiosyncrasies and his own borrowings, and ultimately forced him to change the way he wrote:

"I was looking for the signature of a 'Cope' style. And all of a sudden I was hearing Ligeti, not David Cope" […] "just as Stravinsky said: 'good composers borrow, great ones steal.' What I was hearing was borrowing, not stealing, and I wanted to be a real thief, a professional. So I had to hide all that, at least partly, and I changed my style based on what EMI reflected back to me, and that is simply brilliant."

Many pieces produced by this system can be heard, for example this Mazurka in the style of Chopin and an intermezzo in the manner of Mahler.
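The "analyze a corpus, then generate in its style" loop can be illustrated with a first-order Markov chain over notes. This is a deliberately simple stand-in, not EMI's method (Cope describes his own approach in terms of far more elaborate analysis and recombination); the training melodies are hypothetical.

```python
import random
from collections import defaultdict

def learn(pieces):
    """Analysis step: record which note follows which, keeping repeats as weights."""
    followers = defaultdict(list)
    for piece in pieces:
        for current, nxt in zip(piece, piece[1:]):
            followers[current].append(nxt)
    return dict(followers)

def compose(followers, start, length, seed=None):
    """Generation step: random walk over the learned transitions."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = followers.get(melody[-1])
        if not options:      # no observed continuation: stop early
            break
        melody.append(rng.choice(options))
    return melody

corpus = [  # hypothetical "style" examples, as note names
    ["C", "D", "E", "C", "G", "E", "D", "C"],
    ["E", "F", "G", "E", "D", "C", "D", "E"],
]
model = learn(corpus)
print(compose(model, "C", 12, seed=5))
```

Every note-to-note move in the output was observed somewhere in the corpus, which is also why such models tend to echo their sources, much like the "borrowing" Cope noticed in his own music.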

From the start, Cope wanted to distribute this music through conventional commercial channels. The pieces are often co-signed with Emmy, the nickname he gave his system. Over the years, Emmy acquired two little sisters, Alena and Emily Howell, whose recordings have also been widely distributed.

Asked whether the computer is creative, David Cope replies: "Oh, I have no doubt about it. Yes, yes, a million times yes. Creativity is easy; consciousness, intelligence, that's what's hard."

Numerical approaches versus GOFAI (Good Old-Fashioned Artificial Intelligence)

Later versions of EMI also use learning techniques, which flourished in the early 2000s. Indeed, throughout the history of computing, two approaches have competed.

Symbolic reasoning refers to AI methods built on a symbolic (that is, human-readable) representation of problems: a high-level representation that is understandable, explicit and explainable. The earlier examples all fall into this category. The symbolic AI of the mid-1980s now goes by the nickname GOFAI (Good Old-Fashioned Artificial Intelligence).

Over the past decade, a whole range of numerical techniques has made a strong comeback. They are often inspired by biology, but they also build on advances in statistics and numerical machine learning. Numerical learning relies on numerical representations of the information being processed. Artificial neural networks are an emblematic example. The technique had already been used in the 1960s with the perceptron, invented in 1957 by Frank Rosenblatt, which made it possible to train classifiers through supervised learning (a classifier being an algorithm that decides whether the input presented to it belongs to a given category).

For example, a perceptron can be trained to recognize handwritten letters of the alphabet (here the categories are the letters). The system receives a pixel matrix containing a letter to recognize and outputs the recognized letter. During the training phase, many specimens of each letter are presented and the system is adjusted until it gives the correct classification. Once training is complete, it can be shown a pixel matrix containing a letter that was not among the training specimens, and, if training went well, it recognizes that letter correctly.
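The training loop described above is Rosenblatt's perceptron learning rule: whenever an example is misclassified, the weights are nudged toward the correct answer. Here is a minimal two-category version; the 3x3 "letters" T and L are hypothetical toy glyphs, and a real letter recognizer would need larger images and one output per letter.

```python
# Hypothetical 3x3 pixel glyphs, flattened row by row (1 = ink).
T = [1, 1, 1,
     0, 1, 0,
     0, 1, 0]
L = [1, 0, 0,
     1, 0, 0,
     1, 1, 1]

def train_perceptron(examples, epochs=100, lr=1.0):
    """Perceptron rule: on each mistake, add lr * label * input to the weights."""
    w, b = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in examples:            # label is +1 or -1
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if label * score <= 0:           # wrong side (or on the boundary)
                w = [wi + lr * label * xi for wi, xi in zip(w, x)]
                b += lr * label
                mistakes += 1
        if mistakes == 0:                    # converged: every example correct
            break
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1

w, b = train_perceptron([(T, +1), (L, -1)])
print(predict(w, b, T), predict(w, b, L))  # prints 1 -1
```

The learned weights are positive on pixels that characterize T and negative on those that characterize L, so a slightly different T (a new "specimen") still tends to land on the right side.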

The dominant paradigm in artificial intelligence has shifted over time. In the 1960s, machine learning was in fashion. But at the end of that decade, a famous work, Minsky and Papert's 1969 book Perceptrons, put the brakes on this field by showing that perceptrons cannot learn every classification: a single perceptron can only separate classes with a straight line (more generally, a hyperplane), so it fails on a problem as simple as the exclusive or (XOR). This limitation comes from its architecture, reduced to a single layer of neurons. More complex classes can be recognized by adding layers of neurons, but at the time no learning algorithm existed for training multilayer networks.
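The limitation is easy to reproduce with XOR (output 1 when exactly one input is 1): no straight line separates its two classes, so the perceptron rule never gets all four cases right, whereas two layers of threshold units with hand-chosen weights (one hypothetical choice among many) solve it.

```python
XOR = [((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1)]

def step(z):
    return 1 if z > 0 else 0

def single_layer_accuracy(data, epochs=1000):
    """Train one perceptron with the classic rule and report the best accuracy reached."""
    w, b, best = [0.0, 0.0], 0.0, 0
    for _ in range(epochs):
        for x, label in data:
            if label * (w[0] * x[0] + w[1] * x[1] + b) <= 0:
                w = [w[0] + label * x[0], w[1] + label * x[1]]
                b += label
        correct = sum(1 for x, label in data
                      if label * (w[0] * x[0] + w[1] * x[1] + b) > 0)
        best = max(best, correct)
    return best / len(data)

def two_layer_xor(x1, x2):
    """Hidden units compute OR and AND; the output fires for OR-but-not-AND."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)

print(single_layer_accuracy(XOR))                   # stays below 1.0
print([two_layer_xor(a, c) for (a, c), _ in XOR])   # prints [0, 1, 1, 0]
```

The hidden layer re-describes the inputs (as "OR" and "AND") so that the output unit faces a problem a straight line can solve; the difficulty in the 1960s was learning such weights rather than setting them by hand.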

Such an algorithm, backpropagation, emerged in the 1980s, but it was still extremely costly to run, and it also became clear that training a multilayer network requires an enormous amount of data.
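Backpropagation applies the chain rule layer by layer to compute how much each weight contributed to the error, then adjusts all weights by gradient descent. The NumPy sketch below trains a small 2-4-1 sigmoid network on XOR; the layer size, learning rate, loss (mean squared error) and epoch count are arbitrary illustrative choices, and with an unlucky initialization such a small network can stall before solving the task.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)
    W1, b1 = rng.normal(0.0, 1.0, (2, hidden)), np.zeros(hidden)
    W2, b2 = rng.normal(0.0, 1.0, (hidden, 1)), np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass.
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        losses.append(float(np.mean((out - y) ** 2)))
        # Backward pass: chain rule through the mean squared error and both sigmoids.
        d_out = 2.0 * (out - y) / len(X) * out * (1.0 - out)
        d_h = (d_out @ W2.T) * h * (1.0 - h)
        # Gradient-descent updates.
        W2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        W1 -= lr * (X.T @ d_h)
        b1 -= lr * d_h.sum(axis=0)
    return losses, out

losses, out = train_xor()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(out.round(2).ravel())
```

Even this four-example problem takes thousands of passes; scaling the same procedure to large networks and realistic data is what made the method so demanding in computing power and examples.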

Machine learning

In the early 2000s, algorithms kept improving, machines became ever faster, and, as digital technologies spread, large databases of examples became increasingly available. This favorable combination revived the popularity of numerical machine learning, and the term deep learning can now be heard at every turn ("deep" refers to the number of stacked layers in the network being trained).

The contribution of these new learning techniques is considerable. For example, they make it possible to generate sound directly rather than via a score (since an audio signal carries far more information, learning it requires many layers and countless hours of recorded music). Here are a few samples of instrument sounds reconstructed with these techniques.

Double bass: original, WaveNet

Glockenspiel: original, WaveNet
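One detail of WaveNet shows how raw audio can be generated sample by sample: each sample is first compressed with mu-law companding and quantized to 256 levels, so the network predicts one of 256 classes at each time step, conditioned on the previous samples. The encode/decode pair below is a minimal sketch of that preprocessing only, not of the network; mu = 255 is the standard 8-bit value.

```python
import math

MU = 255  # 8-bit mu-law: 256 quantization levels

def mu_law_encode(x, mu=MU):
    """Map a sample in [-1, 1] to an integer class in [0, mu]."""
    x = max(-1.0, min(1.0, x))
    companded = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    return int((companded + 1.0) / 2.0 * mu + 0.5)

def mu_law_decode(q, mu=MU):
    """Invert the quantization and the companding curve."""
    companded = 2.0 * q / mu - 1.0
    return math.copysign(((1.0 + mu) ** abs(companded) - 1.0) / mu, companded)

for x in (-1.0, -0.1, 0.0, 0.01, 0.5, 1.0):
    q = mu_law_encode(x)
    print(f"{x:+.3f} -> class {q:3d} -> {mu_law_decode(q):+.4f}")
```

The logarithmic curve spends most of the 256 levels on quiet amplitudes, where the ear is most sensitive, which keeps the prediction task small without degrading the sound too much. At 16,000 or more samples per second, it also makes clear why learning directly from audio is so demanding.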

Naturally, these techniques have been applied to composition, and there are many examples of generated Bach chorales. Here is an organ piece produced by one neural network (folk-rnn) and then harmonized by another (DeepBach).

And here is another example of what has been achieved (with folk-rnn) by training a network on 23,962 traditional Scottish songs (from MIDI transcriptions).

One of the issues raised by machine learning is the data used for training. Although this is rarely discussed, and despite a great deal of research, academic and otherwise, musical understanding remains a deeply complex and relational process, so it is far from clear what a corpus of musical examples actually conveys to a model. Music is an elusive object, full of polysemy, unanswerable questions and contradictions. Entire branches of philosophy, art history and media theory are devoted to teasing out the nuances of the ambiguous relationship between music, emotion and meaning. The same questions haunt the field of images, but there they can often be approached through representation. Music, being more abstract, remains harder to pin down.

Economic stakes are never far away either. The company AIVA, for example, organized a concert (at the Louvre Abu Dhabi) featuring five short pieces composed by its system and performed by a symphony orchestra. Other examples include a piece composed specifically for Luxembourg's National Day in 2017, and this excerpt from an album of Chinese music:

Note, however, that only the melody is actually generated by the computer: the orchestration, the arrangement and everything else were done by humans. The same is true of many systems that claim to be automatic composition machines, including the completion of Schubert's Unfinished Symphony by a Huawei smartphone.

From automatic composition to musical companionship

Generating music automatically with a computer is probably of fairly little interest to a composer (or to a listener). But the techniques mentioned above can be used to solve compositional problems or to create new kinds of performance. One compositional example would be producing an interpolation between two rhythms A and B (given at the beginning of the recording).
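To make "interpolating between two rhythms" concrete, here is a deliberately naive symbolic sketch: both rhythms are 16-step onset grids, and the path from A to B flips the steps where they differ, one at a time. It is not meant to reproduce the system heard in the recording, which the text does not describe; learning-based systems usually interpolate in a learned representation instead, to obtain more musical intermediate rhythms. Both rhythms are hypothetical.

```python
def parse(pattern):
    """'x' marks an onset, '.' a rest."""
    return [1 if c == "x" else 0 for c in pattern]

def show(steps):
    return "".join("x" if s else "." for s in steps)

def interpolate(a, b):
    """Naive path from a to b: flip differing steps one at a time, left to right."""
    current = list(a)
    path = [list(current)]
    for i, (sa, sb) in enumerate(zip(a, b)):
        if sa != sb:
            current[i] = sb
            path.append(list(current))
    return path

A = parse("x...x...x...x...")   # hypothetical four-on-the-floor pattern
B = parse("x..x..x...x.x...")   # hypothetical syncopated pattern
for step in interpolate(A, B):
    print(show(step))
```

The intermediate grids are valid rhythms but not necessarily musical ones (some steps add onsets before others remove them), which is precisely the weakness learned representations aim to address.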

Another compositional application is help with orchestration problems. The Orchid family of software, initiated by Gérard Assayag's RepMus team at Ircam, proposes an orchestral score whose sound comes as close as possible to a target "sound" given as input. The latest iteration, Orchidea, developed by Carmine-Emanuele Cella, composer and researcher at the University of California, Berkeley, gives results that are not only interesting but useful.

An original archeos bell and its orchestral imitation

Water drops and their orchestral reproduction

A rooster and its musical counterpart
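The principle behind target-based orchestration can be shown with a brute-force toy: describe each instrument sample and the target by a coarse spectral profile, then search for the small combination of samples whose summed profile is closest to the target. Everything here is hypothetical (the four-band profiles, the sample names, purely additive mixing, Euclidean distance), and exhaustive search only works for tiny databases; the Orchid family relies on much richer descriptors and dedicated optimization methods.

```python
import math
from itertools import combinations

# Hypothetical 4-band energy profiles of instrument samples.
SAMPLES = {
    "flute_C5":    [0.0, 0.9, 0.3, 0.1],
    "clarinet_C4": [0.8, 0.1, 0.5, 0.0],
    "horn_C3":     [1.0, 0.6, 0.2, 0.1],
    "violin_C5":   [0.0, 0.7, 0.6, 0.4],
}

def mix(names):
    """Assume band energies simply add when instruments play together."""
    return [sum(band) for band in zip(*(SAMPLES[n] for n in names))]

def distance(p, q):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def orchestrate(target, max_instruments=3):
    """Exhaustively try every combination of up to max_instruments samples."""
    best, best_d = None, float("inf")
    for k in range(1, max_instruments + 1):
        for names in combinations(SAMPLES, k):
            d = distance(mix(names), target)
            if d < best_d:
                best, best_d = names, d
    return best, best_d

target = mix(["flute_C5", "clarinet_C4"])   # a target we know is reachable
print(orchestrate(target))  # prints (('flute_C5', 'clarinet_C4'), 0.0)
```

With real sound files the target is a recording (a bell, water drops, a rooster), so no combination matches exactly, and the best-scoring one becomes the proposed orchestration.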

Far from a replacement approach, in which AI takes the place of humans, these new techniques suggest the possibility of musical companionship.

This is the goal of the OMax family of systems, also developed at Ircam by Gérard Assayag's team. OMax and its relatives have been used all over the world with major artists, and many videos of performances and public concerts demonstrate what the system can do. These systems implement agents that produce music by navigating creatively through a musical memory learned beforehand or during the performance. They offer a wide range of "composition" or "playing" styles.
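OMax's musical memory is built on the factor oracle, an automaton that indexes repeated patterns in a sequence through "suffix links": each state points back to an earlier place where the same recent context occurred. Improvising then amounts to reading the memory forward and occasionally jumping along a suffix link to a place that shares the current context. The sketch below builds the oracle with the standard online algorithm and navigates it; the symbols (note names), the phrase and the continuation probability are hypothetical, and the real systems add much finer control over navigation.

```python
import random

def build_factor_oracle(seq):
    """Online construction: forward transitions plus suffix links (sfx)."""
    n = len(seq)
    trans = [dict() for _ in range(n + 1)]
    sfx = [-1] * (n + 1)
    for i, symbol in enumerate(seq, start=1):
        trans[i - 1][symbol] = i
        k = sfx[i - 1]
        while k > -1 and symbol not in trans[k]:
            trans[k][symbol] = i
            k = sfx[k]
        sfx[i] = 0 if k == -1 else trans[k][symbol]
    return trans, sfx

def improvise(seq, length, p_continue=0.7, seed=None):
    """Read the memory forward, sometimes jumping back along a suffix link."""
    _, sfx = build_factor_oracle(seq)
    rng = random.Random(seed)
    state, out = 0, []
    for _ in range(length):
        at_end = state == len(seq)
        if at_end or (sfx[state] > 0 and rng.random() > p_continue):
            state = sfx[state]      # jump to an earlier point sharing context
        out.append(seq[state])      # symbol on the transition state -> state + 1
        state += 1
    return out

memory = list("CDECDEGFEDC")   # hypothetical phrase learned from a musician
print("".join(improvise(memory, 24, seed=3)))
```

With `p_continue=1.0` the agent simply replays its memory; lowering it produces more recombination while every jump still lands on a shared musical context, which is what keeps the output idiomatic.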

- An example designed and developed by Georges Bloch, with Hervé Sellin at the piano, to whom Piaf and Schwarzkopf respond on the theme of The Man I Love. The second part of the video features a reactive agent that listens to saxophonist Rémi Fox and answers with musical phrases recorded live shortly before or during the same performance.

- In this other example, a virtual saxophone converses in real time with a human saxophonist, following the structure of a funk tune. The latest version of OMax, developed by Ircam researcher Jérôme Nika, combines a predefined "musical scenario" with reactive listening to navigate the musical memory. In the following three short excerpts, the system responds to Rémi Fox's saxophone by focusing:

  - first on timbre (from the start to 0:28)

  - then on energy (0:29 to 0:57)

  - finally on melody and harmony (0:58 to the end)

The kind of strategy used for co-improvisation in the last example was also used in Lullaby Experience, a project by Pascal Dusapin based on a collection of lullabies gathered from the public over the Internet. Here there is no improvisation at all: the system produces material that the composer then takes up and integrates into the orchestral writing.

A final example in which AI assists the composer rather than replacing them comes from Daniele Ghisi's La Fabrique des Monstres. The musical material of the piece is the output of a neural network at different stages of its training on various corpora. Early in training, the generated music is rudimentary, but as training progresses, archetypal musical structures become more and more recognizable. In a poetic mise en abyme, the humanization of Frankenstein's creature is mirrored in the machine's learning:

A remarkable passage titled StairwayToOpera offers a kind of "synthesis" of the great characteristic moments of operatic arias.

By way of conclusion, necessarily provisional

These examples show that although these techniques often produce music that is not very interesting, they can also open up new modes of interaction and new creative dimensions, and in the process renew our approach to some fascinating and still unresolved questions:

"How can moving music come out of a program that has never heard a note, never lived a single moment of life, never felt any emotion whatsoever?", Douglas Hofstadter

CNRS – STMS lab, IRCAM, Sorbonne Université, Ministère de la Culture