Presented from 4 October 2024 to 2 February 2025 at the Serpentine North Gallery in London, as part of a solo exhibition devoted to the artist duo Holly Herndon and Mat Dryhurst, The Call takes the form of two perfectly symmetrical installations separated by a curtain. In each one, a microphone suspended from the ceiling lets visitors use their own singing, improvised or not, to generate a hymn in the purest British sacred choral style. Produced largely through deep learning on a vast dataset created entirely for the occasion, The Call also aims to be an equally deep reflection on AI and its implications for society at large. This is the first of two parts offering contrasting perspectives, beginning with the two creators and Robin Meier, the computer music designer who (together with Matéo Fayet) is supporting the project.

Holly, Mat: how do you view the use of artificial intelligence in artistic creation?

Holly Herndon & Mat Dryhurst: AI systems are an addition to the already well-stocked toolbox of artistic creation. You can use various models to formalize ideas, to generate material or to create effects, all within a more familiar working framework. For our part, we want to build our own models and our own ways of interacting with them, because we feel we have only skimmed the surface of the possibilities opened up by the large-scale adoption of these technologies in culture and art. Models sit somewhere between a collaborative game, an instrument, archival documents and a costume to wear. What matters is understanding how they work and what more we can ask of them in the service of new kinds of art.

From that perspective, how does this particular project approach the subject?

H.H. & M.D.: The idea was to create a songbook to be rehearsed and then recorded by a number of choirs across the United Kingdom. Those recordings formed a dataset whose content we wanted to make audible through an AI choir generation model. In The Call, we are therefore exhibiting a training protocol. We try to play with every aspect of creating an AI model. We first composed a songbook that covers the full spectrum of the English language. This means that if you sing every song in it, you will obtain enough data to comprehensively train a song generation model based on your voice.

Robin Meier: This songbook was generated with the help of Ken Deguernel, a former doctoral student at Ircam who now works in the Algomus research team attached to the Université de Lille. Together with another researcher, Mathieu Giraud, and one of their students, he developed an AI model for symbolic score generation. The model used was trained on a dataset of popular songs that includes, for example, The Sacred Harp, a collection of sacred choral music from a tradition that originated in New England and developed in the southern United States in the 19th century.

This songbook was meant to train another AI model, designed to generate new choral songs and developed this time by the Analyse et synthèse des sons (sound analysis and synthesis) team, notably with the researcher Nils Demerlé. The central challenge was therefore this: what has to be sung for that AI to work? How do you create a dataset suited to learning choirs, harmonies and colors? More generally, given that everything produced day to day (sounds, texts, videos) will eventually end up in a database to be devoured by automated systems, how do you feed the machine so that it produces what you want?

H.H. & M.D.: This set of choir recordings, made all over the United Kingdom, was then used to train a series of new models. We had already worked on a vocal "timbre transfer" tool (see note 1), but it only allowed monophonic inputs and outputs. Working with the Ircam researchers to make it polyphonic was a huge step forward. We built an interactive model that lets a member of the public, by singing, travel through all of the sampled choral songs. Another model can generate complete new choral compositions from the same training data via a prompt (an instruction sent to an AI).

Through this work with amateur choirs, you were also keen, in The Call, to address the various questions raised by the use of enormous quantities of data by AI models.

H.H. & M.D.: The Call is indeed an opportunity for us to continue our thinking about where datasets come from, by working with Serpentine on a mechanism that allows the participating choirs to co-own their own data and decide its future.

Large AI models require an enormous quantity of data to work well, which inevitably gives them a collective value. We are trying to encourage reflection on what it means to contribute to a project larger than ourselves, for the benefit of all. Emergence, meaning the birth of a whole greater than the sum of its parts, is a concept that belongs to both AI and choral music. It is the Holy Grail in both cases. Experimenting with these ideas is easier in the arts than in a more sensitive field such as healthcare, but the question of how to govern and redistribute the potential wealth of AI models seems essential to us.

R.M.: The question does arise of who owns the dataset, and who owns what is generated with it. Holly and Mat take a critical approach to technology, both in what they say and in what they do. I often distinguish two categories of projects: those created with AI, and those that deal with the subject of AI. The Call combines both, relying on state-of-the-art tools while pushing the reflection on ethical and creative issues a long way.

H.H. & M.D.: We tried to show that the process of training a model on data in a specific context can constitute a new artistic practice. Even if the resulting models will only ever yield the fruits of this dataset, allowing the public to sing with the model, to join the choir, will take them a step further. Although we feel a certain sense of ownership over this process, what is really interesting about training and sharing models is that the art belongs, in a way, both to everyone who contributed to the data and to building the instrument, and to those who use the model. It's a different way of working!

Interview by Jérémie Szpirglas

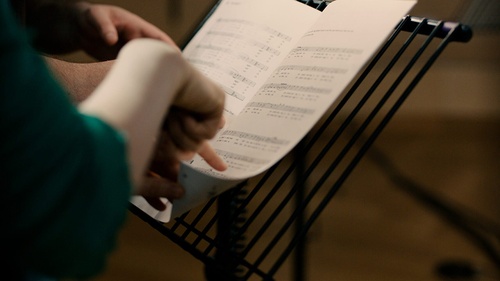

Photos: Holly Herndon and Mat Dryhurst during a recording session with London Contemporary Voices in London, 2024. Courtesy of Foreign Body Productions.

1. Timbre transfer consists of automatically generating a waveform from a source, reproducing its contour but changing its timbre (for example, a violin as input, a flute as output).