Frédéric Bevilacqua is a research director at Ircam, where he heads the Interaction son musique mouvement team. He works on gestural interaction and movement analysis applied to music and the performing arts.

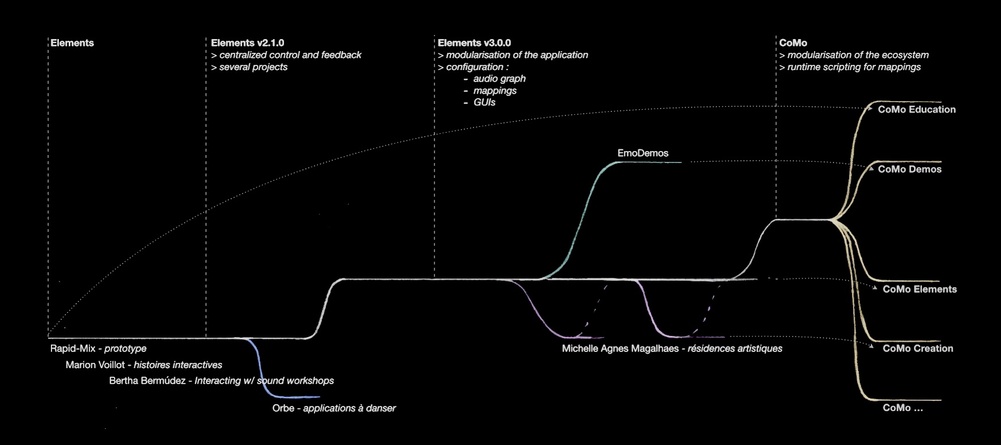

Known for their research on musical interfaces and for developing new digital musical instruments, for which they have received numerous innovation awards over the past decade, Frédéric Bevilacqua and his team recently reached a new milestone by developing CoMo, an open-source web ecosystem that has given rise to a new generation of multimodal, collaborative and more accessible tools. This opened up a new range of applications for research on sound interaction, in fields as varied as education and health, which were addressed within the ANR project ELEMENT, completed in 2022.

To find out more about the new challenges of sound interaction, we met the researcher in his offices at the Sciences et technologies de la musique et du son laboratory, at Ircam.

Frédéric, you specialize in interactive music systems. Can you briefly explain what your research is about?

With my team, we are interested in ways of integrating gestures and the body into our interactions with computers, and with computer music in particular. Drawing on the concepts of embodied interaction, we propose new forms and innovative tools for listening to and playing music.

On the one hand, this includes the possibility of using tangible interfaces or motion sensors to trigger, transform and explore sound spaces. Since neuroscience tells us that our faculties of perception and action are closely linked, these approaches to playing sounds and music can also be regarded as active listening.

On the other hand, we are also interested in collective and social interaction, which is an integral part of the concept of embodied interaction: our environment and the context must be taken into account. The experience of music, from listening to performance, is built up gradually, in a collective and social way.

In ten years, your team has moved from designing hardware prototypes, the Modular Musical Objects (MO), to developing web platforms and online applications (CoMo). Why did you take this turn?

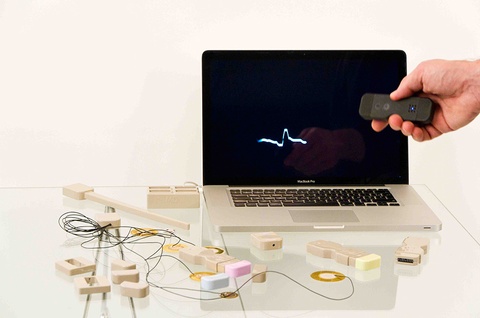

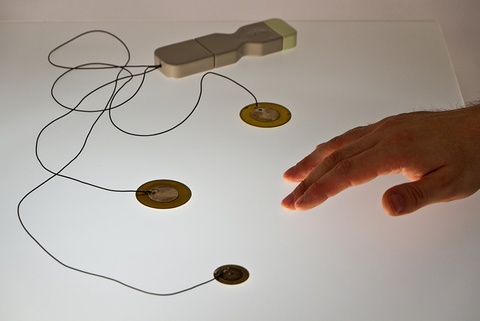

The Modular Musical Objects (MO) had a significant impact and were used in many artistic creations, in different forms and aesthetics. They also gained international recognition by winning the Guthman prize for new musical instruments and by being featured in the Talk to Me exhibition at MoMA in New York.

Examples of Modular Musical Objects © NoDesign

However, these new "digital objects", created in collaboration with NoDesign, were prototypes that were hard to duplicate and maintain because of the technologies we were using about ten years ago. We then took a step forward when PluX commercialized the RIoT-BITalino motion sensors, initially created by Emmanuel Fléty at Ircam. But the major turning point was undoubtedly the use of web technologies, which make it possible to connect a large number of available devices, including our mobile phones, an approach we had initiated in our CoSiMa project.

The MO were innovative musical instruments. But what does CoMo stand for?

Our aim is to be able to implement collective and collaborative interactions on a large scale. The value of web technologies lies precisely in the fact that they provide standards for connecting many sensors and computing devices of all kinds, such as nano-computers or portable devices, even when they are not directly on the internet. The hardware and software infrastructure is suited to creating interactions with tens or hundreds of "objects" connected to a Wi-Fi network. We therefore evolved the Musical Objects (MO) into "Collective Musical Objects", which we called CoMo.

CoMo is in fact a collection of applications that all use the same software base, developed by Benjamin Matuszewski with contributions from Jean-Philippe Lambert and Jules Françoise. These applications connect motion sensors and movement analysis and recognition modules to sound synthesis.

- The most generic application is CoMo-Elements, which can be used with mobile phones by taking advantage of the motion sensors built into every smartphone. CoMo-Elements makes it possible to associate sounds with postures and movements. It is regularly used for musical pieces and workshops, notably with dancers.

- CoMo-Education, designed by Marion Voillot (a designer and doctoral student in our team and at the CRI, Université de Paris Cité), is a specific version intended for schools. This application makes it possible to tell stories through movement by generating a sound world that is "played" by the children.

- CoMo-Rééducation is an application designed during Iseline Peyre's thesis for a home-based self-rehabilitation program with interactive music, intended for patients who have had a stroke.

- Finally, CoMo-Vox is an application developed in partnership with Radio France for learning simple choir-conducting gestures.

Photo: CoMo-Rééducation, a movement sonification device for self-rehabilitation (Iseline Peyre's thesis). Photo credit © Mikael Chevallier


Your team has also multiplied its artistic collaborations in recent years, for interactive installations (Biotope by Jean-Luc Hervé, Square by Lorenzo Bianchi), collective performances (by Chloé, Michelle Agnes Magalhaes, Aki Ito, Garth Paine) and cultural outreach projects for young audiences (Maestro, Maesta at the Philharmonie des enfants, which lets children step into the conductor's shoes). What lessons does science draw from these musical experiences?

That is an interesting question, because I do not think we can speak of science in the singular. We really sit at the intersection of several ways of conceiving scientific research, between knowledge about technology and knowledge about people. We are truly at the crossroads of computer science, cognitive science, design, and the humanities and social sciences, each of which has its own methodologies. For example, we study how we can learn or relearn movements guided by sound, and how to implement technical devices that make this possible. But our devices are not mere technical applications: we are interested in the questions of appropriation, usability and creativity that they stimulate. Each situation involves different contexts that we have to take into account, and which are fields of research in their own right. In addition, we are increasingly interested in the ethics and sustainability of our research.

The composer Michelle Agnes Magalhaes and the ensemble soundinitiative, performance of Constella(c)tions at Ircam, 2019 © Valentin Boulay

Today, new fields of application for your research are opening up in education and health. Why have other sectors become interested in the interactions between gesture and sound?

Learning is shaped by our perceptions, actions and emotions. Many pedagogical approaches propose taking the body, movement and collective interaction into account. Many of the scenarios we have devised are used directly to learn to be attentive to others, to learn movements, or simply to develop listening skills. CoMo-Education, which I have already mentioned and which makes it possible to tell stories through movement and music, is a very good example; it was designed in collaboration with teachers. As for health, sound and music make it possible to create playful and motivating applications, which seems to us to be one of the keys to rehabilitation at home. But many other applications are possible. For example, we have been approached to work with people with disabilities.

The ANR project ELEMENT, which has just been carried out with the LISN, the CNRS and Paris-Saclay, promoted the development of more complex and expressive human-machine interactions in movement-based interfaces. Which fundamental research questions did the project help to answer?

We continued our research on the notions of learning and aptitude in relation to digital interfaces. How can we learn to master new interfaces, gestural interfaces in particular? Notions of intuitiveness are often invoked in industry as a selling point, but they are not so simple to put into practice. One of the fundamental questions that drives us is, more specifically, how to adapt each interface to the needs of its users. This means understanding the various communities of users, their needs and aptitudes, and designing interactions and tools that can adapt to different situations.

What scientific advances did it make possible? And what are its concrete applications?

For one thing, we are developing the notion of "gesture and movement design", that is, developing methodologies and tools for gestural interaction. This can be seen as a new discipline, much like sound design, which emerged some years ago. We are developing tools that let users define their own gestures according to the context and their abilities. All the CoMo applications we have discussed are concrete applications that came out of the ELEMENT project and are based on this approach. Our partners (LISN and ISIR) have also developed a new machine learning platform called Marcelle, which lets users collectively build and test pattern and movement recognition models. Our philosophy is therefore to give users as many opportunities as possible to appropriate, control and modify the contents of machine learning systems.